By Vienna Alexander, Marketing Content Professional, Marvell

In a recent Forbes and Statista ranking, Marvell was named as one of America’s Best Midsize Employers for 2026.

The America’s Best Employers ranking, now in its eleventh year, recognizes organizations that have demonstrated an outstanding commitment to fostering collaborative workplaces. To present the ranking, Forbes partnered with established market research firm Statista.

The list is based on more than 217,000 independent survey responses from U.S. employees at companies with a national workforce of at least 1,000 people. To further categorize the results, companies with between 1,000 and 5,000 employees were deemed midsize, while companies with more than 5,000 employees were classified as large employers. The survey evaluates six areas: Atmosphere and Development; Salary and Wage; Company Image; Culture; Working Conditions; and Workplace Environment.

By Alua Suleimenova, Senior Sustainability Program Manager, Marvell

This blog was originally posted in Semiconductor Engineering.

The semiconductor industry is the bedrock of modern technology, enabling everything from AI and cloud computing to electric vehicles. Yet this critical sector is also one of the most resource-intensive globally, with a substantial dependency on water. A single fabrication plant can demand up to 10 million gallons of water daily, comparable to the consumption of a city with 300,000 residents. Much of this water is, of course, reused and recycled through sophisticated systems. This immense water usage, particularly the requirement for ultrapure water for processes like cleaning and etching, makes consistent access to high-quality water non-negotiable for operational reliability and business continuity. The new insights report “Ripple Effects: Water Risk and Resilience Across the Semiconductor Value Chain” provides the first global baseline of water risk hotspots for the semiconductor sector, assessing water risks at 140 facilities in 89 water basins to inform future risk mitigation strategies.

By Khurram Malik, Senior Director of Marketing, Custom Cloud Solutions, Marvell

Can AI beat a human at the game of twenty questions? Yes.

And can a server enhanced by CXL beat an AI server without it? Yes, and by a wide margin.

While CXL technology was originally developed for general-purpose cloud servers, the technology is now finding a home in AI as a vehicle for economically and efficiently boosting the performance of AI infrastructure. To this end, Marvell has been conducting benchmark tests on different AI use cases.

In December, Marvell, Samsung and Liqid showed how Marvell® Structera™ A CXL compute accelerators can reduce the time required for conducting vector searches (for analyzing unstructured data within documents) by more than 5x.

In February, Marvell showed how a trio of Structera A CXL compute accelerators can process more queries per second than a cutting-edge server CPU and at a lower latency while leaving the host CPU open for different computing tasks.

Today, this blog post will show how Structera CXL memory expanders can boost performance of inference tasks.

AI and Memory Expansion

Unlike CXL compute accelerators, CXL memory expanders do not contain additional processing cores for near-memory computing. Instead, they supersize memory capacity and bandwidth. Marvell Structera X, released last year, provides a path for adding up to 4TB of DDR5 DRAM or 6TB of DDR4 DRAM to servers (12TB with integrated LZ4 compression) along with 200GB/second of additional bandwidth. Multiple Structera X modules, moreover, can be added to a single server; CXL modules slot into PCIe ports rather than the more limited DIMM slots used for memory.
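As a rough illustration of the capacity arithmetic above, the following Python sketch totals the memory and bandwidth that several Structera X modules could add to one server. The per-module figures come from the paragraph above. The function name `added_resources` is hypothetical, and the linear scaling across modules is an assumption: real totals depend on the available PCIe ports and the platform.

```python
# Per-module figures quoted above for Marvell Structera X.
DDR5_TB_PER_MODULE = 4           # up to 4TB of DDR5 DRAM
DDR4_TB_PER_MODULE = 6           # up to 6TB of DDR4 DRAM
COMPRESSED_TB_PER_MODULE = 12    # up to 12TB with integrated LZ4 compression
BANDWIDTH_GB_S_PER_MODULE = 200  # additional bandwidth in GB/second


def added_resources(modules: int, tb_per_module: int) -> tuple[int, int]:
    """Return (added capacity in TB, added bandwidth in GB/s).

    Assumption: capacity and bandwidth scale linearly with module count.
    This is an idealized upper bound, not a measured result.
    """
    if modules < 0:
        raise ValueError("modules must be non-negative")
    return modules * tb_per_module, modules * BANDWIDTH_GB_S_PER_MODULE


if __name__ == "__main__":
    capacity_tb, bandwidth_gb_s = added_resources(2, DDR5_TB_PER_MODULE)
    print(f"2 modules (DDR5): up to {capacity_tb}TB, {bandwidth_gb_s}GB/s extra")
```

Under these assumptions, two DDR5-based modules would add up to 8TB of capacity and 400GB/second of bandwidth.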

By Vienna Alexander, Marketing Content Professional, Marvell

In the Product of the Year Awards, Electronic Design News (EDN) named Marvell as the winner of the Interconnects category for its 3nm 1.6T PAM4 Interconnect Platform, known as Ara. Ara is the industry’s first 3nm PAM4 DSP specializing in bandwidth, power efficiency and integration for AI and data center scale-out accelerated infrastructure.

These awards are a 50-year tradition recognizing outstanding products that demonstrate significant technological advancement, especially innovative design, or a substantial improvement in price or performance. More than 100 products spanning 13 categories were evaluated to determine the winners.

The Ara 3nm 1.6T PAM4 DSP integrates eight 200G electrical lanes and eight 200G optical lanes in a compact, standardized module form factor. Ara sets a new standard in optical interconnect technology, integrating advanced laser drivers and signal processing into a single device, thereby reducing power per bit. The product simplifies system design across entire AI data center network stacks. It also reduces power consumption, enabling denser optical connectivity and faster deployment of AI clusters.

Ara has also received other recognitions, demonstrating Marvell leadership in optical DSPs and the interconnect realm.

By Khurram Malik, Senior Director of Marketing, Custom Cloud Solutions, Marvell

While CXL technology was originally developed for general-purpose cloud servers, it is now emerging as a key enabler for boosting the performance and ROI of AI infrastructure.

The logic is straightforward. Training and inference require rapid access to massive amounts of data. However, the memory channels on today’s XPUs and CPUs struggle to keep pace, creating the so-called “memory wall” that slows processing. CXL breaks this bottleneck by leveraging available PCIe ports to deliver additional memory bandwidth, expand memory capacity and, in some cases, integrate near-memory processors. As an added advantage, CXL provides these benefits at a lower cost and lower power profile than the usual way of adding more processors.

To showcase these benefits, Marvell conducted benchmark tests across multiple use cases to demonstrate how CXL technology can elevate AI performance.

In December, Marvell and its partners showed how Marvell® Structera™ A CXL compute accelerators can reduce the time required for vector searches used to analyze unstructured data within documents by more than 5x.

Here’s another use case: deploying CXL to lower latency.

Lower Latency? Through CXL?

At first glance, lower latency and CXL might seem contradictory. Memory connected through a CXL device sits farther from the processor than memory connected via local memory channels. With standard CXL devices, this typically results in higher latency between CXL memory and the primary processor.

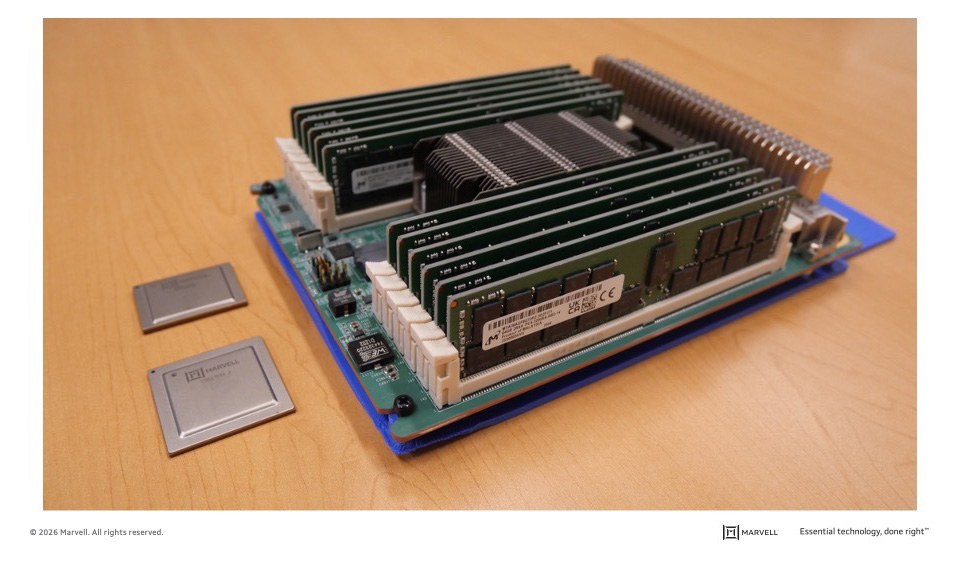

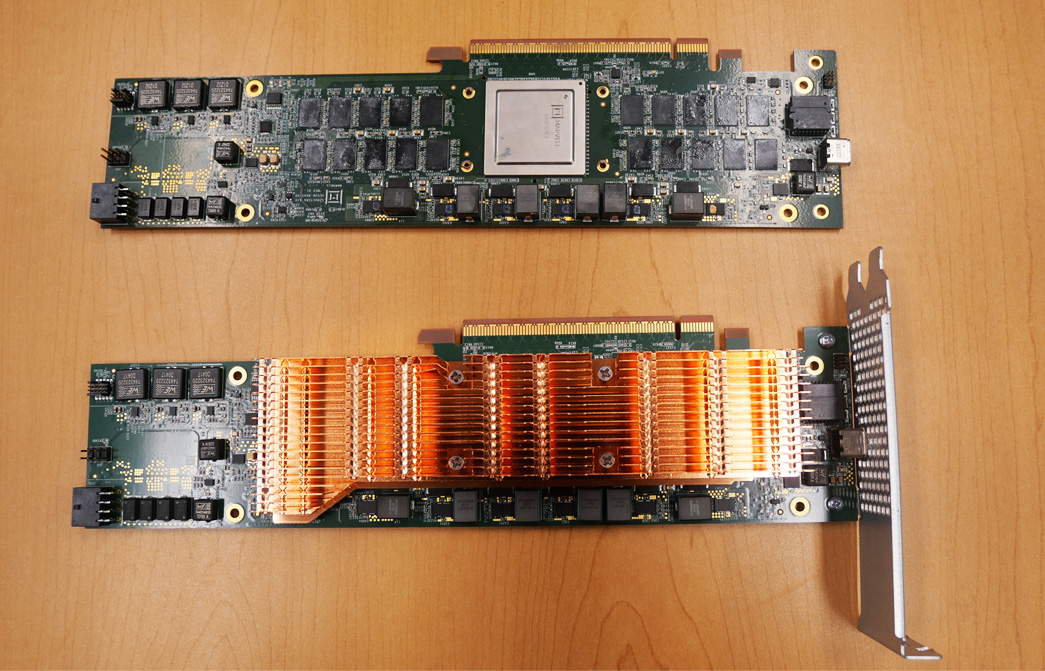

Marvell Structera A CXL memory accelerator boards with and without heat sinks.