Connectivity for AI in the Million XPU Era

AI has been a huge catalyst for the adoption of connectivity in networks.

Operators are already deploying AI data centers with over 200,000 GPUs, and they are moving even faster toward 1 million XPUs. Growing bandwidth requirements, together with the growing size and number of clusters needed for AI applications, have made interconnect solutions a massive growth market. The global optical interconnect market has doubled since 2020 to nearly 20 billion dollars in 2025, and it is expected to double again by 2030, with an industry compound annual growth rate (CAGR) of about 18%.[1]

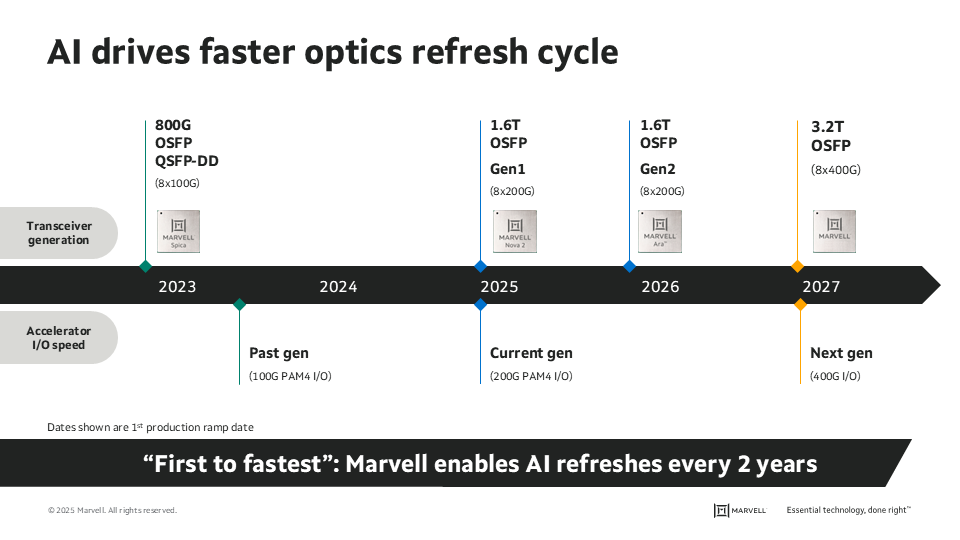
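As a rough check on the growth figures above, the sketch below computes the compound annual growth rate implied by a market that doubles in five years, and how long doubling takes at a given rate. The 20 and 40 billion dollar endpoints are the rounded values from the text, not the exact forecast data, and the helper names `cagr` and `years_to_double` are illustrative.

```python
import math


def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate as a fraction (0.15 means 15% per year)."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("start_value, end_value, and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


def years_to_double(rate: float) -> float:
    """Years needed to double at a constant annual growth rate."""
    if rate <= 0:
        raise ValueError("rate must be positive")
    return math.log(2.0) / math.log(1.0 + rate)


# Assumption: rounded endpoints taken from the text (billions of dollars).
implied = cagr(20.0, 40.0, 5.0)
print(f"Implied CAGR for doubling in 5 years: {implied:.1%}")
print(f"Years to double at 18% CAGR: {years_to_double(0.18):.1f}")
```

With these rounded inputs, doubling over five years works out to roughly 15% per year, while an 18% rate doubles in a little over four years. The gap is most likely due to the rounding of "nearly 20 billion" and "double"; the forecast's exact endpoints are not given here.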

AI also jumpstarted the transition to higher bandwidth optics. The IO bandwidth of traditional compute servers lags behind network IO bandwidth by at least one generation. AI server bandwidth, however, leaps forward by one generation. This shift triggered much greater demand for higher bandwidth connectivity and accelerated the pace of innovation.

Marvell has been continually releasing new innovations in optical connectivity at an accelerated pace, enabling the scaling of AI applications.

Challenges in Supporting Modern AI Workloads at Scale

As AI moves to the network edge in particular, it requires efficient data transfer, which presents many connectivity and networking challenges.

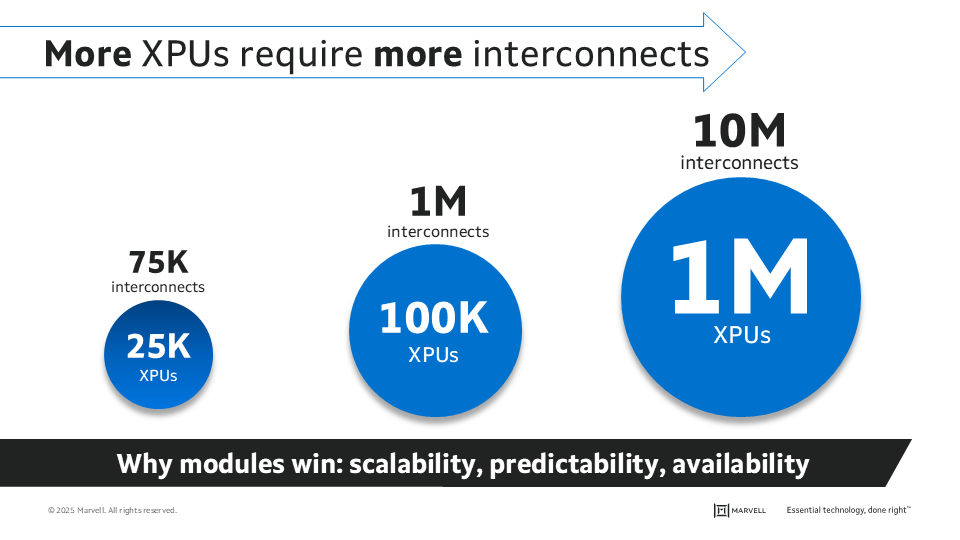

A larger number of GPUs working together needs a bigger fabric to connect them. A cluster with 100,000 GPUs may need 500,000 interconnects along with thousands of servers and switches. A million GPUs could need 10 million interconnects and collectively span several kilometers.[2] Power usage could approach a gigawatt, which is just one of several concerns that enterprises face in supporting modern AI workloads.

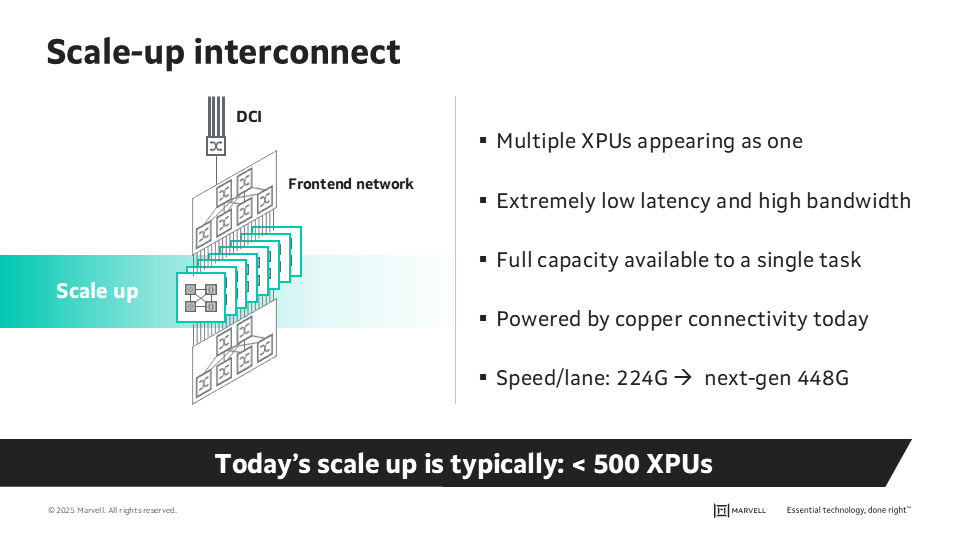

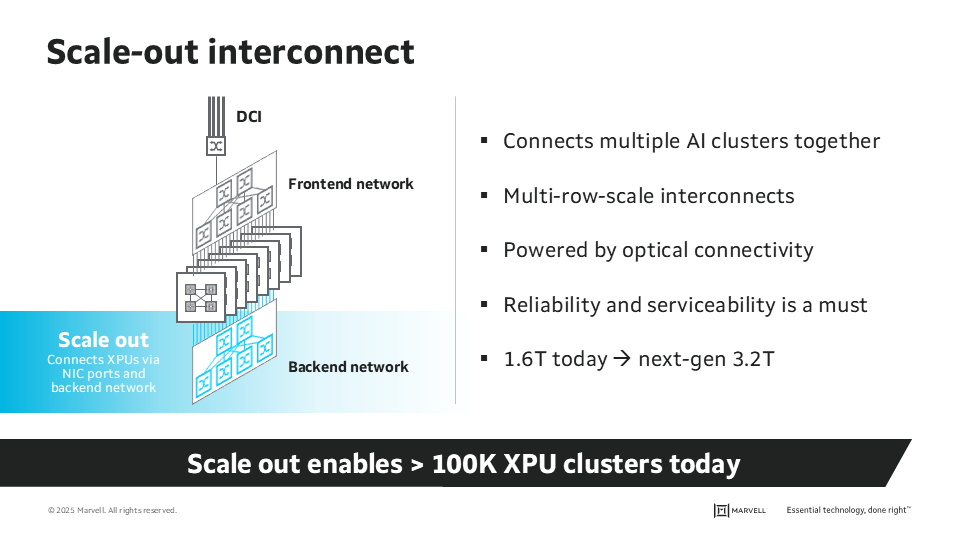
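The cluster figures quoted above imply that the interconnect count grows faster than the GPU count. A minimal sketch of that arithmetic follows. It assumes the gigawatt figure refers to the million-GPU cluster as a whole (compute, networking, and facility overhead together), which the text does not state explicitly, and the function names are illustrative.

```python
def links_per_gpu(gpus: int, interconnects: int) -> float:
    """Average number of interconnects per GPU in a cluster."""
    if gpus <= 0:
        raise ValueError("gpus must be positive")
    return interconnects / gpus


def watts_per_gpu(total_watts: float, gpus: int) -> float:
    """Total power budget divided evenly across GPUs."""
    if gpus <= 0:
        raise ValueError("gpus must be positive")
    return total_watts / gpus


# Figures quoted in the text: GPU count -> interconnect count.
clusters = {100_000: 500_000, 1_000_000: 10_000_000}

for gpus, interconnects in clusters.items():
    ratio = links_per_gpu(gpus, interconnects)
    print(f"{gpus:>9,} GPUs: {ratio:.0f} interconnects per GPU")

# Assumption: roughly 1 GW for the whole million-GPU cluster.
print(f"Power per GPU at 1 GW: {watts_per_gpu(1e9, 1_000_000):.0f} W")
```

On these numbers, a tenfold increase in GPUs goes with a twentyfold increase in interconnects (5 per GPU rising to 10 per GPU), and a gigawatt spread over a million GPUs is about 1 kW each, including everything around the accelerator itself.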

The system that supports AI comprises two separate but connected networks: scale up, which enhances the capacity of a single server or system by combining all of its resources, and scale out, which connects these servers to the network.

In scale up, the main challenge will be latency, followed by power requirements. Scale up is a new market for connectivity, so there is reason for optimism that these concerns will be remedied in the next few years through innovations such as ultra-low-latency networking infrastructure, high density co-packaged optics, and low power direct I/O interfaces.

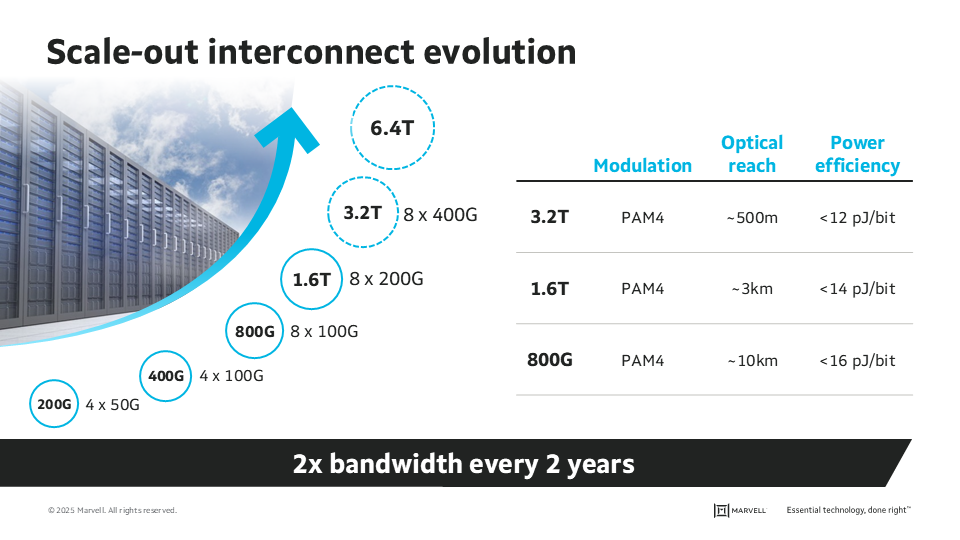

In scale out, the big challenge is scalability. Potential innovations to address it include coherent-lite and high bandwidth 400G optics, along with the new modulation schemes needed to support these use cases.

The evolution of scale out is accelerating to match the new demands shaped by AI. Instead of doubling bandwidth every three years, Marvell is doubling it every two years.[3]
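To see how much the shorter doubling period matters, the sketch below compares the two cadences over the same time span. The six-year horizon is an arbitrary choice for illustration, and `bandwidth_multiple` and `annual_growth` are hypothetical helper names.

```python
def bandwidth_multiple(years: float, doubling_period_years: float) -> float:
    """Bandwidth relative to today after `years`, doubling every period."""
    if doubling_period_years <= 0:
        raise ValueError("doubling_period_years must be positive")
    return 2.0 ** (years / doubling_period_years)


def annual_growth(doubling_period_years: float) -> float:
    """Equivalent yearly growth rate for a given doubling period."""
    return bandwidth_multiple(1.0, doubling_period_years) - 1.0


horizon_years = 6.0  # assumption: illustrative horizon, not from the source
for period in (3.0, 2.0):
    multiple = bandwidth_multiple(horizon_years, period)
    rate = annual_growth(period)
    print(f"Doubling every {period:.0f} years: {multiple:.0f}x after "
          f"{horizon_years:.0f} years ({rate:.1%} per year)")
```

Over six years, a three-year cadence yields 4x the bandwidth while a two-year cadence yields 8x, so the faster cadence doubles the end result within just two product cycles of the slower one.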

Enabling Next-generation AI Through Connectivity Solutions

Marvell is part of the ecosystem of partners working to make next-generation AI a reality. This budding industry holds many opportunities, and Marvell is bringing innovations like low-latency networks, higher bandwidth optics, and coherent-lite technology to enable multi-site, high bandwidth, large-scale networks.

AI relies on technologies like optical transceivers and active electrical cables (AECs) for most connections of three meters or longer. These become especially necessary as node sizes increase and AI networks expand.

In the next generation of AI, clusters are ramping up to 1 million XPUs. At this scale, new requirements emerge, such as fabrics that combine high bandwidth and low latency with cost and power efficiency. These requirements, in turn, fuel new connectivity solutions and widespread innovation. At the board level, new generations of SerDes IO are being developed and deployed at a faster cadence. At the cluster level, electrical and optical solutions optimized for each application scenario are becoming available. Across data center locations, Marvell is delivering coherent transmission technology that was originally reserved for long-haul telecom but is now being used on data center campuses.

All of these innovations have become enablers for next-generation AI. The question is not only who has the best accelerator but also who has the best connectivity solutions. With the number and size of AI clusters growing rapidly, operators need a flexible and interchangeable solution that scales to support their needs. A vast network of partners supporting a pluggable ecosystem is the go-to solution for large scale networks.

[1] LightCounting, April Market Forecast, April 2025

[2] Marvell Accelerated Infrastructure for the AI Era Day, April 2024

[3] Lenin Patra, Marvell presentation at OFC, March 2025

# # #

This blog contains forward-looking statements within the meaning of the federal securities laws that involve risks and uncertainties. Forward-looking statements include, without limitation, any statement that may predict, forecast, indicate or imply future events or achievements. Actual events or results may differ materially from those contemplated in this blog. Forward-looking statements are only predictions and are subject to risks, uncertainties and assumptions that are difficult to predict, including those described in the “Risk Factors” section of our Annual Reports on Form 10-K, Quarterly Reports on Form 10-Q and other documents filed by us from time to time with the SEC. Forward-looking statements speak only as of the date they are made. Readers are cautioned not to put undue reliance on forward-looking statements, and no person assumes any obligation to update or revise any such forward-looking statements, whether as a result of new information, future events or otherwise.

Tags: AI, AI infrastructure


Copyright © 2026 Marvell, All rights reserved.
