Co-packaged Optics: Powering the Next Wave of AI Data Center Innovation

Co-packaged optics (CPO) will play a fundamental role in improving the performance, efficiency, and capabilities of networks, especially the scale-up fabrics for AI systems.

Realizing these benefits will also require a fundamental transformation in the way computing and switching assets are designed and deployed in data centers. Marvell is partnering with equipment manufacturers, cable specialists, interconnect companies and others to ensure the infrastructure for delivering CPO will be ready when customers are ready to adopt it.

The Trends Driving CPO

AI’s insatiable appetite for bandwidth and the physical limitations of copper are driving demand for CPO. Network bandwidth doubles every two to three years, and the reach of copper shrinks meaningfully as bandwidth increases. Meanwhile, data center operators are clamoring for better performance per watt and per rack.

CPO eases the problem by moving electrical-to-optical conversion from an external slot on the faceplate to a position as close to the ASIC as possible. This shortens the copper trace, which may improve the link budget enough to remove digital signal processor (DSP) or retimer functionality, thereby reducing the overall power per bit, a key metric in AI data center management. Achieving commercial viability and scalability, however, has taken years of R&D across the ecosystem, and the benefits will likely depend on the use cases and applications where CPO is deployed.

While analyst firms such as LightCounting predict that optical modules will continue to constitute the majority of optical links inside data centers through the decade,¹ CPO will likely become a meaningful segment.

The CPO Server Tray

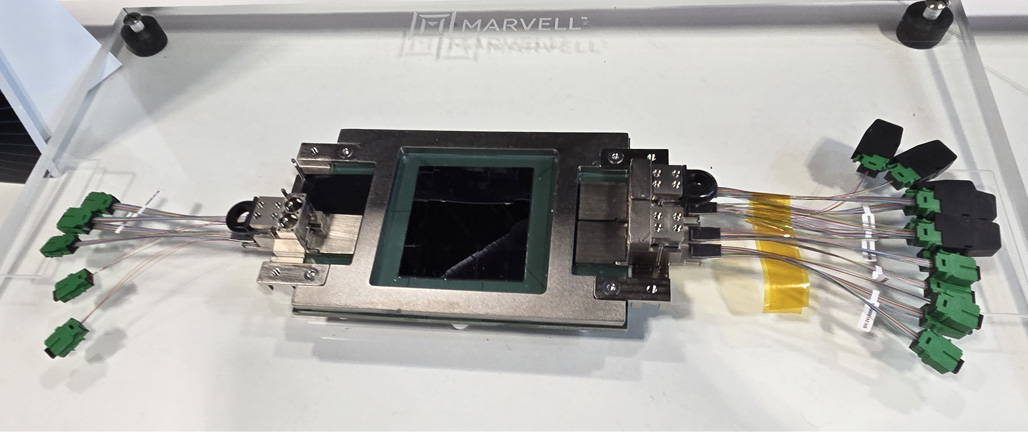

The image above shows a conceptual AI compute tray with CPO, developed with products from SENKO Advanced Components and Marvell. The design has room for four XPUs and up to 102.4 Tbps of bandwidth delivered through 1,024 optical fibers, all in a 1U tray. The density and reach enabled by CPO open the door to scale-up domains far beyond what is possible with copper alone.

When asked at recent trade shows how many fibers the tray contained, most attendees guessed around 250 fibers. The actual number is 1,152 fibers.

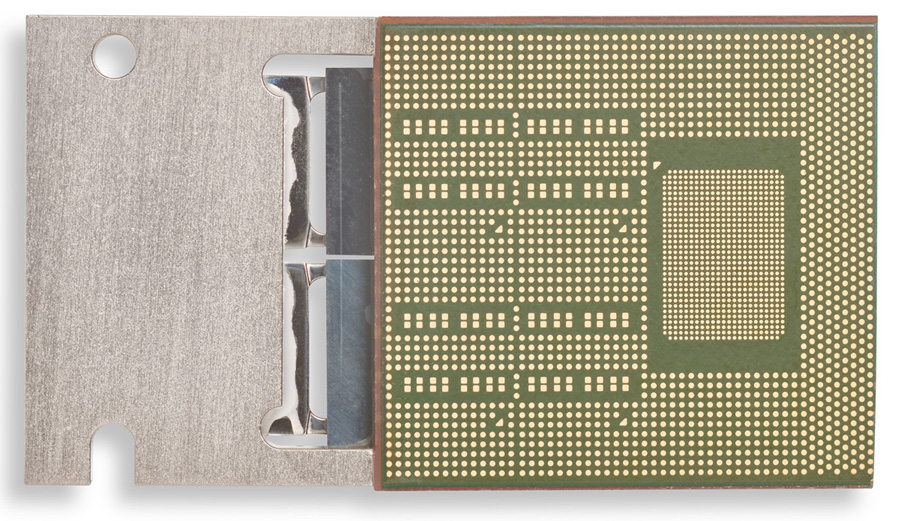

A close-up of the XPU shows another aspect of the design: serviceability. Each XPU is connected to four Marvell 6.4T light engines for opto-electric conversion. Each light engine interfaces with two 36-fiber detachable Metallic PIC Couplers (MPCs) from SENKO. The MPCs, identifiable by their integrated handles, are engineered for precise and repeatable alignment of microlenses and other optical components that transmit the light into the network. With a total of 32 MPCs and 1,152 fibers per compute tray, repeatability and reliability are critical.

The Marvell 6.4 Tbps light engine (top) converts electrical signals to optical ones. Two 36-fiber Metallic PIC Couplers (MPCs) from SENKO (bottom, side of image) attach on top of it to link the XPU to the network. The modularity of the system results in a more robust, scalable network.
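The tray figures quoted above can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only: the constant names are invented for this example, and the values are the per-XPU and per-light-engine counts stated in the text.

```python
# Counts taken from the text above; the names are illustrative, not product terms.
XPUS_PER_TRAY = 4
LIGHT_ENGINES_PER_XPU = 4
TBPS_PER_LIGHT_ENGINE = 6.4
MPCS_PER_LIGHT_ENGINE = 2
FIBERS_PER_MPC = 36

# Totals for one compute tray.
light_engines = XPUS_PER_TRAY * LIGHT_ENGINES_PER_XPU
tray_bandwidth_tbps = light_engines * TBPS_PER_LIGHT_ENGINE
mpcs = light_engines * MPCS_PER_LIGHT_ENGINE
fibers = mpcs * FIBERS_PER_MPC

print(f"light engines per tray: {light_engines}")
print(f"tray bandwidth: {tray_bandwidth_tbps:.1f} Tbps")
print(f"MPCs per tray: {mpcs}")
print(f"fibers per tray: {fibers}")
```

Under these counts, the totals come to 16 light engines, 102.4 Tbps, 32 MPCs, and 1,152 fibers, which matches the tray-level numbers given in the text.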

Heat and Space

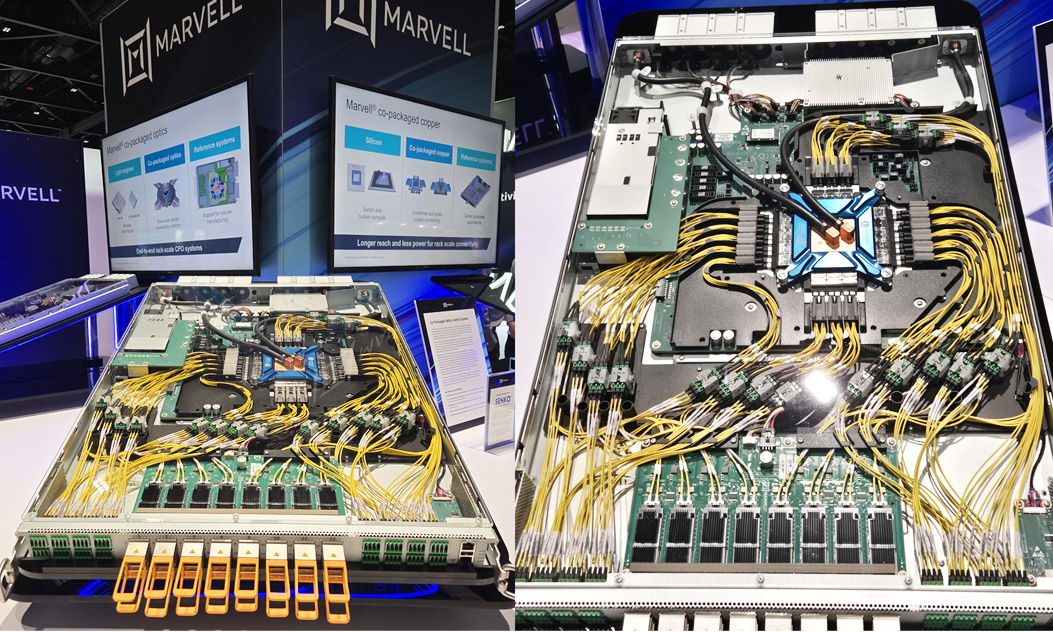

Marvell, SENKO, Jabil, and Mikros Technologies also recently unveiled a reference design of a data center CPO switch.

The Ethernet switch ASIC (the large semiconductor in the center of the image) is surrounded by 16 light engine tiles and 1,152 fibers (128 laser fibers and 1,024 data fibers). The light engines are driven by 16 laser modules, which are connected to the faceplate for better serviceability. Positioning the laser modules at the faceplate also keeps them cooler, improving laser reliability.
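As a rough illustration of how the switch's fiber count breaks down, the sketch below divides the stated laser and data fibers across the 16 light engine tiles. The even split per tile is an assumption made for this example, not something the reference design description states, and `per_tile_fibers` is a hypothetical helper.

```python
def per_tile_fibers(tiles: int, laser_fibers: int, data_fibers: int) -> dict:
    """Split fiber counts evenly across light engine tiles.

    The even split is an assumption for illustration only.
    """
    if laser_fibers % tiles or data_fibers % tiles:
        raise ValueError("fiber counts do not divide evenly across tiles")
    return {
        "laser": laser_fibers // tiles,
        "data": data_fibers // tiles,
        "total": (laser_fibers + data_fibers) // tiles,
    }


# Figures from the text: 16 tiles, 128 laser fibers, 1,024 data fibers.
switch = per_tile_fibers(tiles=16, laser_fibers=128, data_fibers=1024)
print(switch)  # {'laser': 8, 'data': 64, 'total': 72}
```

If the split is in fact even, each tile would carry 72 fibers, the same count as two 36-fiber connectors.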

The CPO switch reference design includes a cold-plate cooling system from Mikros Technologies (the copper plate with the blue cover), which helps reduce the height of the system.

Cold-plate liquid cooling from Mikros Technologies features a low-profile design that maintains a system height of 1OU (see the copper plate with a blue mounting bracket in the center of the photo). The active cooling height is 3.6 mm, and the overall height, including barb fittings and the mounting bracket, totals one inch. In contrast, conventional air cooling would require a chassis that is two to three times thicker. Put simply, transitioning to liquid cooling enables significant densification of AI data center racks.

This increased rack density is driven by Mikros Technologies’ MikroMatrix™ platform, a cold plate design that employs a matrix array of microchannels oriented perpendicular to the surface. This unique design dramatically increases the contact area within the cold plate for better heat dissipation. The result is superior heat transfer performance, targeted cooling of hot spots, increased energy efficiency, a larger thermal budget, and improved overall system reliability, far surpassing the capabilities of traditional finned and jet impingement designs.

See a video of the system: Co-Packaged Optics (CPO) Demo Led by Chris McCormick at ECOC

Toward a CPO Ecosystem

It’s become apparent on this CPO journey that a broad range of skill sets is required. Experts in power delivery, cooling, cable management, connectors, optics and other areas will need to work in concert to build dense, cutting-edge systems and develop technologies that make deploying and servicing these unique systems a repeatable, “simple” process. This challenge will only grow as scale-up systems expand from tens of processors to hundreds and even thousands.

The scope and complexity of AI infrastructure go far beyond what individual companies can do on their own. Expect to hear more about how Marvell is building an ecosystem to accelerate this process.

1. LightCounting, May 2025.

# # #

This blog contains forward-looking statements within the meaning of the federal securities laws that involve risks and uncertainties. Forward-looking statements include, without limitation, any statement that may predict, forecast, indicate or imply future events or achievements. Actual events or results may differ materially from those contemplated in this blog. Forward-looking statements are only predictions and are subject to risks, uncertainties and assumptions that are difficult to predict, including those described in the “Risk Factors” section of our Annual Reports on Form 10-K, Quarterly Reports on Form 10-Q and other documents filed by us from time to time with the SEC. Forward-looking statements speak only as of the date they are made. Readers are cautioned not to put undue reliance on forward-looking statements, and no person assumes any obligation to update or revise any such forward-looking statements, whether as a result of new information, future events or otherwise.

Tags: AI, AI infrastructure, networking for AI training workloads, Networking, data center interconnect, Optical Connectivity, Co-packaged Optics, CPO

Copyright © 2026 Marvell, All rights reserved.
