By Michael Kanellos, Head of Influencer Relations, Marvell

This story was also featured in Electronic Design

Some technologies experience stunning breakthroughs every year. In memory, it can be decades between major milestones. Burroughs invented magnetic memory in 1952 so ENIAC wouldn’t lose time pulling data from punch cards¹. In the 1970s, DRAM replaced magnetic memory, and in the 2010s, HBM arrived.

Compute Express Link (CXL) represents the next big step forward. CXL devices essentially take advantage of available PCIe interfaces to open an additional conduit that complements the overtaxed memory bus. More lanes, more data movement, more performance.

Additionally, and arguably more importantly, CXL will change how data centers are built and operated. It’s a technology that will have a ripple effect. Here are a few scenarios showing how it could affect infrastructure:

1. DLRM Gets Faster and More Efficient

Memory bandwidth, the amount of data that can be transferred from memory to a processor per second, has chronically been a bottleneck because processor performance increases far faster and more predictably than bus speed or bus capacity. To help narrow that gap, designers have added more lanes or co-processors.

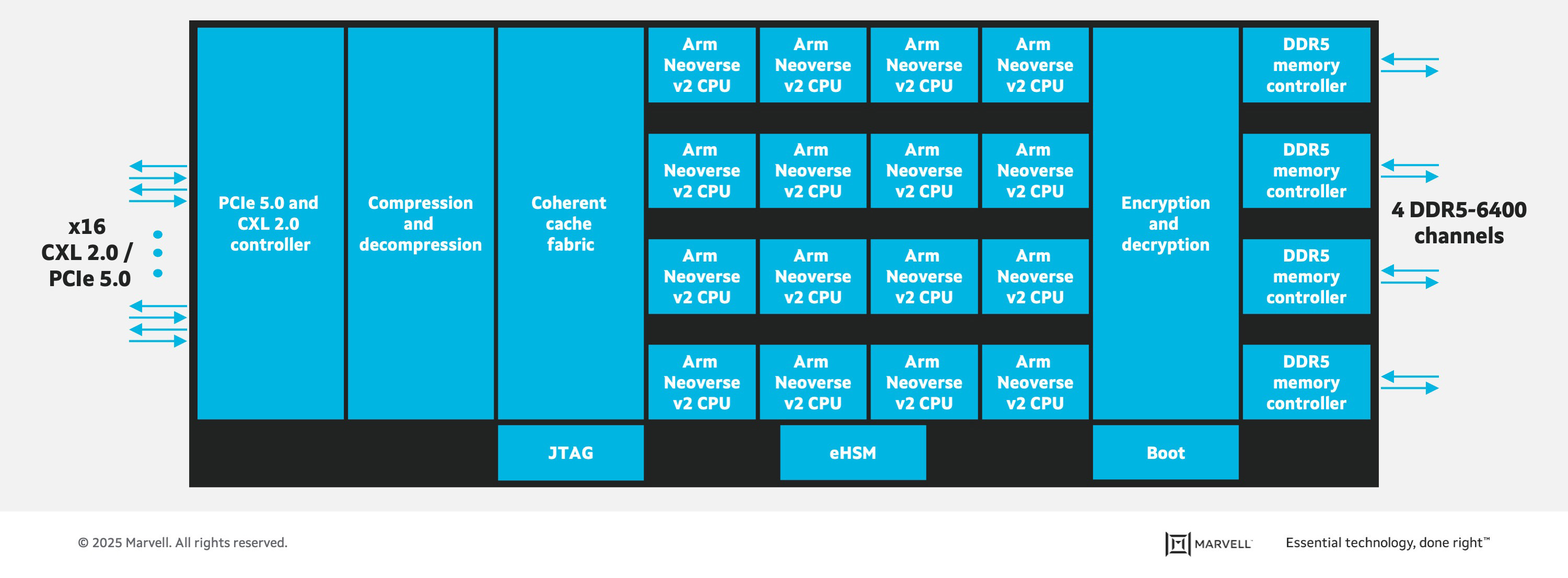

Marvell® Structera™ A does both. The first device of its kind in a new industry category of memory accelerators, Structera A combines 16 Arm Neoverse N2 cores with a processing fabric and other Marvell-only technology. It delivers 200 GB/s of memory bandwidth, supports up to 4TB of memory and consumes under 100 watts. It’s essentially a server-within-a-server with outsized memory bandwidth for bandwidth-intensive tasks like inference or deep learning recommendation models (DLRM). Cloud providers need to program their software to offload tasks to Structera A, but doing so brings a number of benefits.

Take a high-end x86 processor. Today it might sport 64 cores, 400 GB/s of memory bandwidth and up to 2TB of memory (i.e., four top-of-the-line 512GB DIMMs), and consume a maximum of 400 watts, a ratio of 1 W per GB/s of memory bandwidth.
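To make the power comparison concrete, the short Python sketch below works out watts per GB/s of memory bandwidth for both devices. It is a back-of-the-envelope illustration under stated assumptions: bandwidth figures are read as gigabytes per second (the unit implied by the 1 W per GB/s ratio), Structera A’s "under 100 watts" is treated as an upper bound of exactly 100 W, and the function name `watts_per_gbs` is hypothetical.

```python
# Back-of-the-envelope power efficiency comparison.
# Assumption: bandwidths are in GB/s, since 400 W / 400 GB/s = 1 W per GB/s.
X86_POWER_W = 400.0          # maximum power of the high-end x86 example
X86_BANDWIDTH_GBS = 400.0    # memory bandwidth of the x86 example
X86_CAPACITY_GB = 4 * 512    # four 512GB DIMMs = 2048 GB (2TB)

STRUCTERA_POWER_W = 100.0        # "under 100 watts", used here as an upper bound
STRUCTERA_BANDWIDTH_GBS = 200.0  # memory bandwidth quoted for Structera A


def watts_per_gbs(power_w: float, bandwidth_gbs: float) -> float:
    """Hypothetical helper: power spent per GB/s of memory bandwidth (lower is better)."""
    if bandwidth_gbs <= 0:
        raise ValueError("bandwidth must be positive")
    return power_w / bandwidth_gbs


x86_ratio = watts_per_gbs(X86_POWER_W, X86_BANDWIDTH_GBS)
structera_ratio = watts_per_gbs(STRUCTERA_POWER_W, STRUCTERA_BANDWIDTH_GBS)

print(f"x86:         {x86_ratio:.2f} W per GB/s, {X86_CAPACITY_GB} GB max memory")
print(f"Structera A: <= {structera_ratio:.2f} W per GB/s")
```

Because 100 W is only an upper bound, the Structera A figure is a ceiling: on these numbers it spends at most half the power per GB/s of the x86 example.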

By Nicola Bramante, Senior Principal Engineer

Transimpedance amplifiers (TIAs) are one of the unsung heroes of the cloud and AI era.

At the recent OFC 2025 event in San Francisco, exhibitors demonstrated the latest progress on 1.6T optical modules featuring Marvell 200G TIAs. Recognized by multiple hyperscalers for their superior performance, Marvell 200G TIAs are becoming a standard component in 200G/lane optical modules for 1.6T deployments.

TIAs take the weak electrical current that light detectors produce from incoming optical signals and convert it into a voltage, so the underlying data can be transmitted between, and used by, servers and processors in data centers and in scale-up and scale-out networks. Put another way, TIAs help data travel from photons to electrons. TIAs also amplify the signals for optical digital signal processors (DSPs), which filter out noise and preserve signal integrity.
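The photon-to-electron step can be illustrated with the textbook model of an ideal transimpedance amplifier: a photodiode turns received optical power P into a current I = R·P (R is the photodiode’s responsivity), and an ideal inverting TIA with feedback resistance R_f turns that current into a voltage V_out = -I·R_f. The Python sketch below is a simplified illustration, not a model of Marvell’s 200G TIAs: it ignores bandwidth, noise and the other effects that dominate real high-speed designs, and every numeric value and function name in it is hypothetical.

```python
# Idealized receive chain: photodiode -> transimpedance amplifier (TIA).
# All numeric values are hypothetical and chosen only for illustration.


def photocurrent_a(optical_power_w: float, responsivity_a_per_w: float) -> float:
    """Photodiode output current in amperes: I = R * P."""
    return responsivity_a_per_w * optical_power_w


def tia_output_v(current_a: float, feedback_ohms: float) -> float:
    """Ideal inverting TIA: V_out = -I_in * R_f (no bandwidth or noise limits)."""
    return -current_a * feedback_ohms


RESPONSIVITY_A_PER_W = 0.8   # hypothetical photodiode responsivity
OPTICAL_POWER_W = 0.5e-3     # hypothetical received optical power (0.5 mW)
FEEDBACK_OHMS = 500.0        # hypothetical transimpedance gain

i_pd = photocurrent_a(OPTICAL_POWER_W, RESPONSIVITY_A_PER_W)  # about 0.4 mA
v_out = tia_output_v(i_pd, FEEDBACK_OHMS)                      # about -0.2 V

print(f"photocurrent: {i_pd * 1e3:.2f} mA")
print(f"TIA output:   {v_out:.3f} V")
```

The feedback resistance sets the current-to-voltage gain; in a real receiver, raising it improves sensitivity but trades off against bandwidth, which is part of what makes 200G-per-lane TIAs hard to design.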

And they are pervasive. Virtually every data link inside a data center longer than three meters includes an optical module (and hence a TIA) at each end. TIAs are critical components of fully retimed optics (FRO), transmit retimed optics (TRO) and linear pluggable optics (LPO). They enable scale-up servers with hundreds of XPUs, active optical cables (AOCs) and other emerging technologies, including co-packaged optics (CPO), where TIAs are integrated into optical engines that can sit on the same substrate as the switch or XPU ASICs. TIAs are also essential for long-distance ZR/ZR+ interconnects, which have become the leading solution for connecting data centers and telecom infrastructure.

Overall, TIAs are a must-have component for any optical interconnect solution, and the market for interconnects is expected to triple to $11.5 billion by 2030, according to LightCounting.

Copyright © 2025 Marvell, All rights reserved.