A Deep Dive into the Copper and Optical Interconnects Weaving AI Clusters Together

This article is part three in a series on talks delivered at Accelerated Infrastructure for the AI Era, a one-day symposium held by Marvell in April 2024.

Twenty-five years ago, network bandwidth ran at 100 Mbps, and it was aspirational to think about moving to 1 Gbps over optical. Today, links are running at 1 Tbps over optical, or 10,000 times faster than those 100 Mbps links of a quarter century ago.

Another interesting fact: “Every single large language model today runs on compute clusters that are enabled by Marvell’s connectivity silicon,” said Achyut Shah, senior vice president and general manager of Connectivity at Marvell.

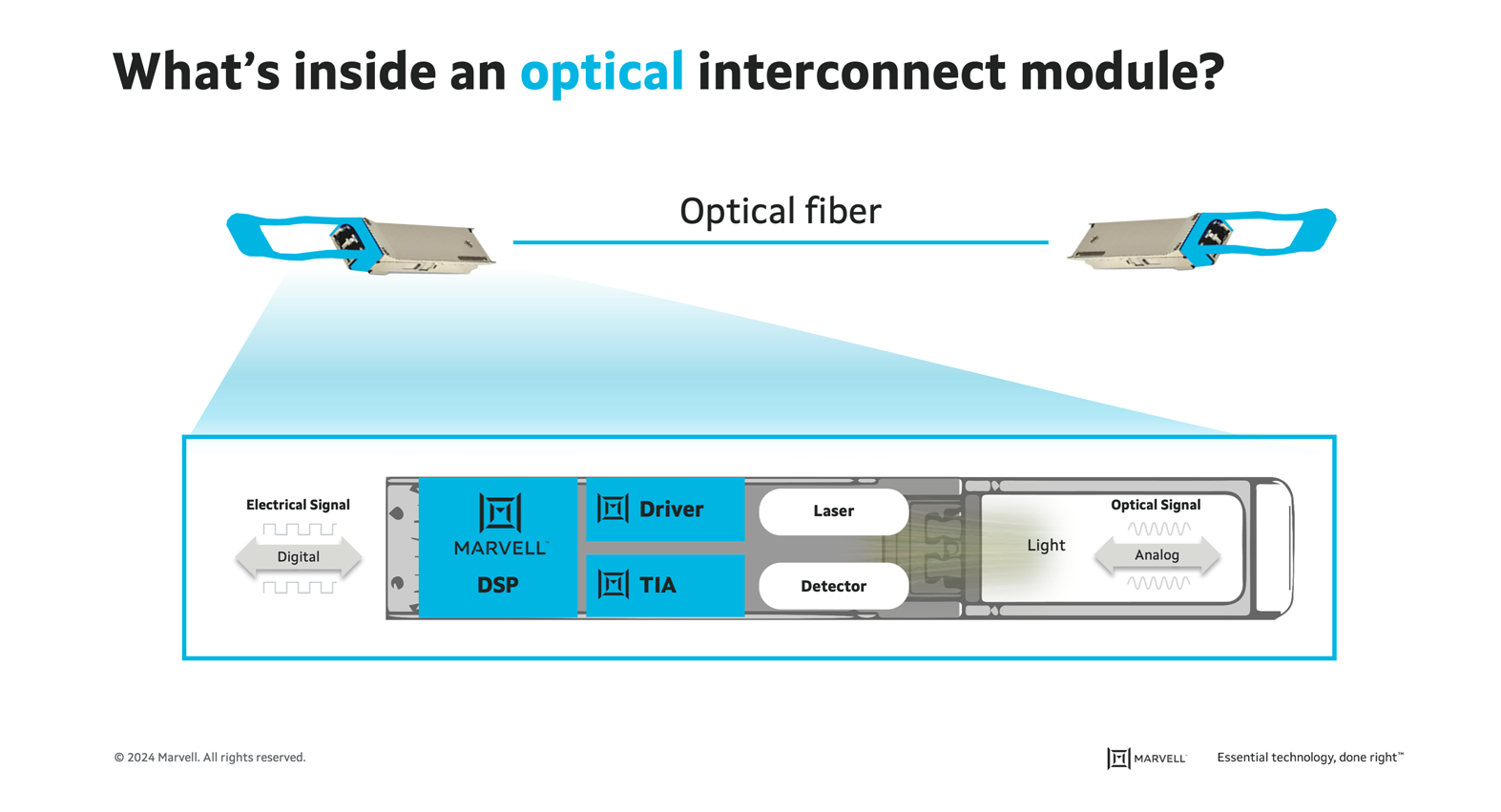

To keep ahead of what customers need, Marvell continually seeks to boost the capacity, speed, and performance of the digital signal processors (DSPs), transimpedance amplifiers (TIAs), drivers, firmware, and other components inside interconnects. It’s an interdisciplinary endeavor involving expertise in high-frequency analog, mixed-signal, digital, firmware, software, and other technologies. The following is a map of the different components and challenges shaping the future of interconnects, and of how that future will shape AI.

Inside the Data Center

From a high level, optical interconnects perform the task their name implies: they deliver data from one place to another while keeping errors from creeping in during transmission. Another important task, however, is enabling data center operators to scale quickly and reliably.

“When our customers deploy networks, they don’t start deploying hundreds or thousands at a time,” said Shah. “They have these massive data center clusters—tens of thousands, hundreds of thousands and millions of (computing) units—that all need to work and come up at the exact same time. These are at multiple locations, across different data centers. The DSP helps ensure that they don’t have to fine tune every link by hand.”

Training a large language model (LLM) on a large cluster often takes weeks or months. If a link goes down, even for an instant, the work in progress collapses, which translates into lost revenue and profit. Diagnostics and telemetry capabilities in the DSP can help detect failures in advance.
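To make the idea of catching a failing link early more concrete, here is a minimal sketch of one way monitoring software could act on error-rate telemetry. It is not Marvell's method or any real DSP API: the function name `link_needs_attention`, the sample format, the window size, and the `ber_limit` value are all hypothetical and chosen purely for illustration.

```python
from statistics import mean


def link_needs_attention(ber_samples, ber_limit=1e-5, window=4):
    """Flag a link whose recent error rate is at, or trending toward, a limit.

    ber_samples: bit-error-rate readings, oldest first (hypothetical telemetry).
    ber_limit:   illustrative threshold, not a value from any specification.
    window:      number of readings averaged together.
    """
    if len(ber_samples) < window:
        return False  # not enough readings to judge

    recent = mean(ber_samples[-window:])
    if recent >= ber_limit:
        return True  # already at or above the limit

    if len(ber_samples) < 2 * window:
        return False  # no earlier window to compare against

    older = mean(ber_samples[-2 * window:-window])
    # Rising error rate that has reached half the limit: warn early.
    return recent > older and recent >= 0.5 * ber_limit


stable = [1e-8] * 8
degrading = [1e-8, 1e-8, 1e-8, 1e-8, 3e-6, 5e-6, 7e-6, 9e-6]
print(link_needs_attention(stable))     # False
print(link_needs_attention(degrading))  # True
```

The point of the sketch is only that a trend in telemetry can be acted on before a link actually drops, so an operator could drain or replace the link rather than lose a training run.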

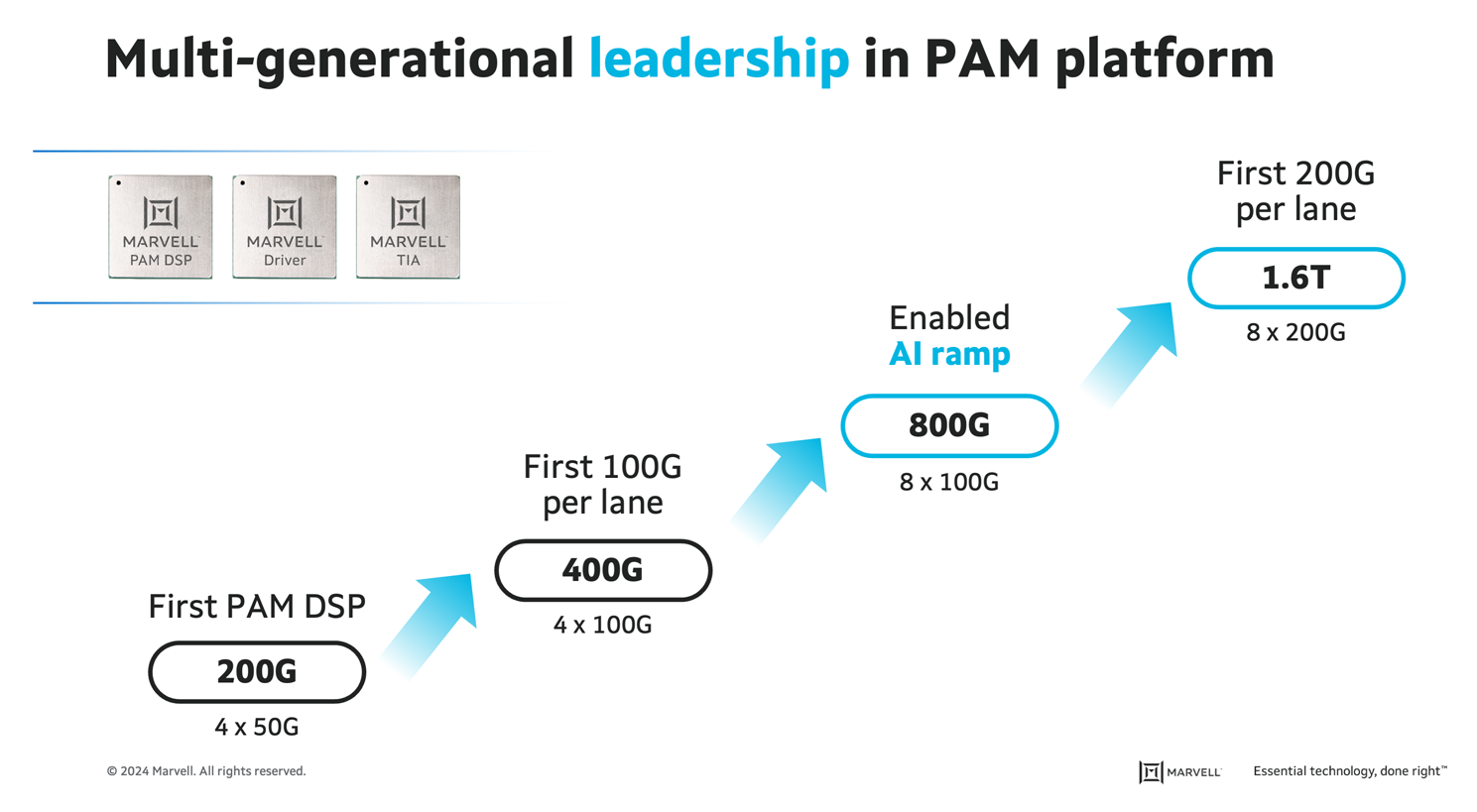

Pluggable optical modules running on PAM4 DSPs have become fundamental for server-to-switch and switch-to-switch connectivity: the vast majority of connections from 5 meters to 2 kilometers inside data centers or campuses today are forged with PAM4 DSP-based optical modules. Bandwidth has doubled roughly every two years, with power per bit falling by approximately 30% per generation. Last year, Marvell announced the industry’s first 1.6T PAM4 DSP, and this year it announced the first 1.6T DSP with 200G electrical and optical lanes. Work on 3.2T is underway.
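The figures in this paragraph lend themselves to some quick arithmetic. The sketch below is illustrative only: the function names are hypothetical, the symbol rate is nominal (it ignores FEC and encoding overhead), and the power projection simply compounds the "doubling" and "approximately 30%" figures quoted above rather than describing any specific product.

```python
import math

# PAM4 uses four amplitude levels, so each symbol carries 2 bits.
BITS_PER_PAM4_SYMBOL = 2


def lanes_needed(module_gbps, lane_gbps):
    """Number of lanes required to carry a module's aggregate bandwidth."""
    return math.ceil(module_gbps / lane_gbps)


def nominal_symbol_rate_gbaud(lane_gbps):
    """Nominal PAM4 symbol rate per lane, ignoring FEC and encoding overhead."""
    return lane_gbps / BITS_PER_PAM4_SYMBOL


def relative_module_power(generations, bandwidth_growth=2.0, power_per_bit_drop=0.30):
    """Module power relative to generation 0.

    Assumes bandwidth doubles and power per bit falls by about 30%
    with every generation, as stated in the text.
    """
    per_generation = bandwidth_growth * (1.0 - power_per_bit_drop)
    return per_generation ** generations


print(lanes_needed(1600, 200))                 # 8 lanes for 1.6T at 200G per lane
print(nominal_symbol_rate_gbaud(200))          # 100.0 GBaud nominal
print(round(relative_module_power(1), 2))      # 1.4
```

Under these assumptions, total module power still rises by roughly 1.4x per generation even as each bit becomes cheaper to move, which is one reason power-reducing device classes keep attracting attention.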

Traditional PAM4 DSPs are also being complemented with new classes of devices. Integrated DSPs such as Marvell Perseus can reduce overall power consumption for short-to-medium reaches.

While DSPs are the principal device inside these modules, they are complemented by transimpedance amplifiers and optical drivers that amplify and clean signals during transmission and reception. Silicon photonics devices—which integrate hundreds of components into a single chip—will also begin to percolate into the data center. (See next blog in the series.)

Inside the Rack

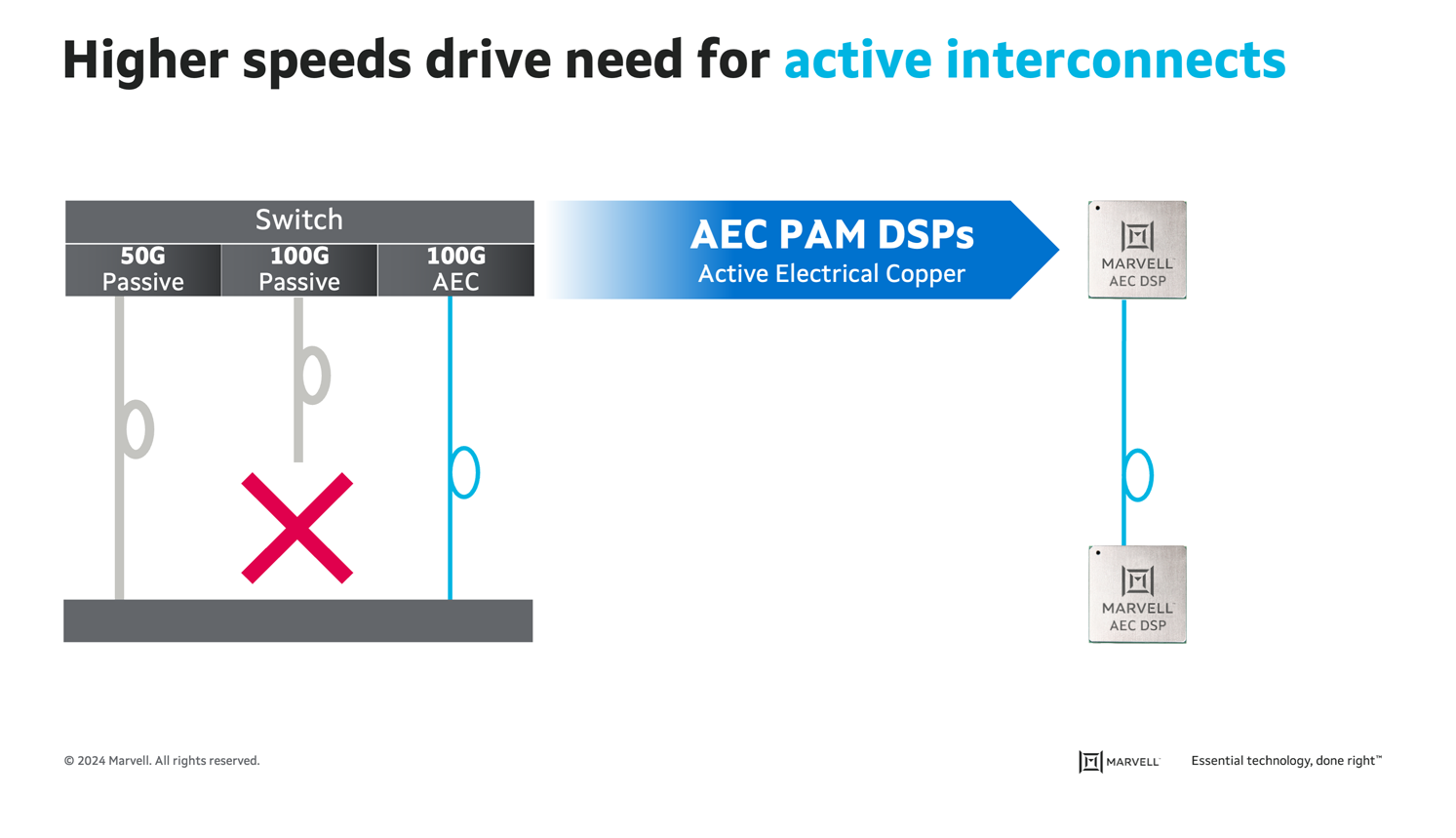

Passive copper connections remain the norm for short interconnects that link servers to switches within cloud data center racks or xPUs to each other in AI clusters. Inexpensive and reliable, passive copper is the main technology for connections of 5 meters or less. The shift from 50 Gbps to 100 Gbps connection speeds, however, changes the picture. At 100 Gbps, ordinary passive cables can’t reliably send signals over even these modest distances. Thicker cables would be required, but they add bulk and cost and create installation and management headaches.

Active electrical cable (AEC) technology addresses this problem by inserting DSPs inside copper connections for greater bandwidth and speed over longer distances. PCIe retimers, an emerging class of products that scale connections between AI accelerators, GPUs, CPUs, and other components inside servers, work on the same principle: adding DSP technology elevates the performance of short-reach connections while ensuring a desired level of data integrity.

Marvell is collaborating with Amphenol, Broadex, Molex, TE Connectivity, and others on AEC solutions. Marvell is also shipping DSPs for active copper now to hyperscale customers.

Between Data Centers

With the number of data centers exploding globally, demand is rising for the longer-distance links that connect them. Marvell recently announced Orion, its 800 Gbps coherent DSP for links up to 500 kilometers long, as well as COLORZ® 800, a data center interconnect (DCI) pluggable module based on Orion. Orion-based modules dramatically reduce the cost and power required for these links, including the cost of installation.

At the other end of the spectrum, AI is driving demand for entirely new types of interconnect technology. These large clusters need a flat, low-latency network that spans a larger building or multiple buildings within the same campus. As a result, interconnects must grow from less than 2 km to approximately 10 to 20 km in reach, which means they require the reach of coherent technology but the latency and power of a PAM link. Coherent technology tailored for inside the data center will be one answer.
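The reach figures scattered through this article can be gathered into a rough lookup. The sketch below is a simplification under stated assumptions: the function name `interconnect_class` is hypothetical, the boundaries are taken loosely from the distances quoted in the text (5 meters, 2 kilometers, roughly 20 kilometers, 500 kilometers), and real deployments weigh cost, power, and latency rather than distance alone.

```python
def interconnect_class(reach_m, lane_gbps=100.0):
    """Map a link length in meters to the interconnect class the article associates with it.

    Boundaries are approximations of the distances quoted in the text,
    not engineering limits.
    """
    if reach_m <= 0:
        raise ValueError("reach_m must be positive")

    if reach_m <= 5:
        # Passive copper is described as struggling at 100 Gbps;
        # AECs add a DSP inside the copper connection.
        if lane_gbps < 100:
            return "passive copper"
        return "active electrical cable (AEC)"
    if reach_m <= 2_000:
        return "PAM4 DSP-based optical module"
    if reach_m <= 20_000:
        return "coherent technology tailored for inside the data center"
    if reach_m <= 500_000:
        return "coherent DSP-based DCI module"
    return "beyond the reaches discussed in this article"


for meters in (3, 500, 10_000, 120_000):
    print(meters, "m ->", interconnect_class(meters))
```

Seen this way, the 2 km to 20 km band is the gap AI campuses are opening up between today's PAM4 modules and long-haul DCI links.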

The demand for AI connectivity is insatiable, and Marvell’s complete interconnect portfolio is poised to go the distance and give customers the tools they need to scale AI networks today and in the future.

To read the first two blogs in this series, go to The AI Opportunity and Scaling AI Means Scaling Interconnects.

# # #

Marvell and the M logo are trademarks of Marvell or its affiliates. Please visit www.marvell.com for a complete list of Marvell trademarks. Other names and brands may be claimed as the property of others.

This blog contains forward-looking statements within the meaning of the federal securities laws that involve risks and uncertainties. Forward-looking statements include, without limitation, any statement that may predict, forecast, indicate or imply future events, results or achievements. Actual events, results or achievements may differ materially from those contemplated in this blog. Forward-looking statements are only predictions and are subject to risks, uncertainties and assumptions that are difficult to predict, including those described in the “Risk Factors” section of our Annual Reports on Form 10-K, Quarterly Reports on Form 10-Q and other documents filed by us from time to time with the SEC. Forward-looking statements speak only as of the date they are made. Readers are cautioned not to put undue reliance on forward-looking statements, and no person assumes any obligation to update or revise any such forward-looking statements, whether as a result of new information, future events or otherwise.

Tags: AI, AI infrastructure, Optical Interconnect, Data Center Interconnects, Data Center Interconnect Solutions, Optical Connectivity, PAM4 DSP-based Optical Connectivity


Copyright © 2026 Marvell, All rights reserved.
