By Vienna Alexander, Marketing Content Professional, Marvell

Marvell was announced as the top Connectivity winner in the 2025 LEAP Awards for its 1.6 Tbps LPO Optical Chipset. The judges' remarks noted that “the value case writes itself—less power, reduced complexity but substantial bandwidth increase.” Marvell earned the gold spot, reaffirming the industry-leading connectivity portfolio it is continually building.

The LEAP (Leadership in Engineering Achievement Program) Awards recognize best-in-class product and component designs across 11 categories with the feedback of an independent judging panel of experts. These awards are published by Design World, the trade magazine that covers design engineering topics in detail.

This chipset, combining a 200G/lane TIA (transimpedance amplifier) and laser drivers, enables 800G and 1.6T linear-drive pluggable optics (LPO) modules. LPO modules offer longer reach than passive copper, at low power and low latency, and are designed for scale-up compute-fabric applications.

By Winnie Wu, Senior Director Product Marketing at Marvell

Welcome to the beginning of row-scale computing.

At the 2025 OCP Global Summit, Marvell and Infraeo will showcase a breakthrough in high-speed interconnect technology: a 9-meter active electrical cable (AEC) capable of transmitting 800G across standard copper. The demonstration will take place at Marvell booth #B1.

This latest innovation brings data center architecture one step closer to full row-scale AI system design, allowing copper connections that stretch across seven racks, nearly the length of a standard 10-rack row. It builds on the 7-meter AEC that Marvell demonstrated at OFC 2025, pushing high-speed copper technology even further beyond what was thought possible.

Pushing the Boundaries of Copper

Until now, copper connections in large-scale AI systems have been limited by reach. Traditional electrical cables lose signal quality as distance increases, restricting system architects to a few meters between servers or racks. The 9-meter AEC changes that equation.

By combining high-performance digital signal processing (DSP) with advanced noise reduction and signal integrity engineering, the new design extends copper’s effective range well beyond conventional limits, maintaining clean, low-latency data transfer over distances once thought achievable only with optical fiber.

By Annie Liao, Product Management Director, ODSP Marketing, Marvell

For over 20 years, PCIe, or Peripheral Component Interconnect Express, has been the dominant standard for connecting processors, NICs, drives and other components within servers. Its position rests on the protocol's low latency and high bandwidth, as well as the growing expertise around PCIe across the technology ecosystem. It will also play a leading role in defining the next generation of computing systems for AI, through performance increases and the combination of PCIe with optics.

Here’s why:

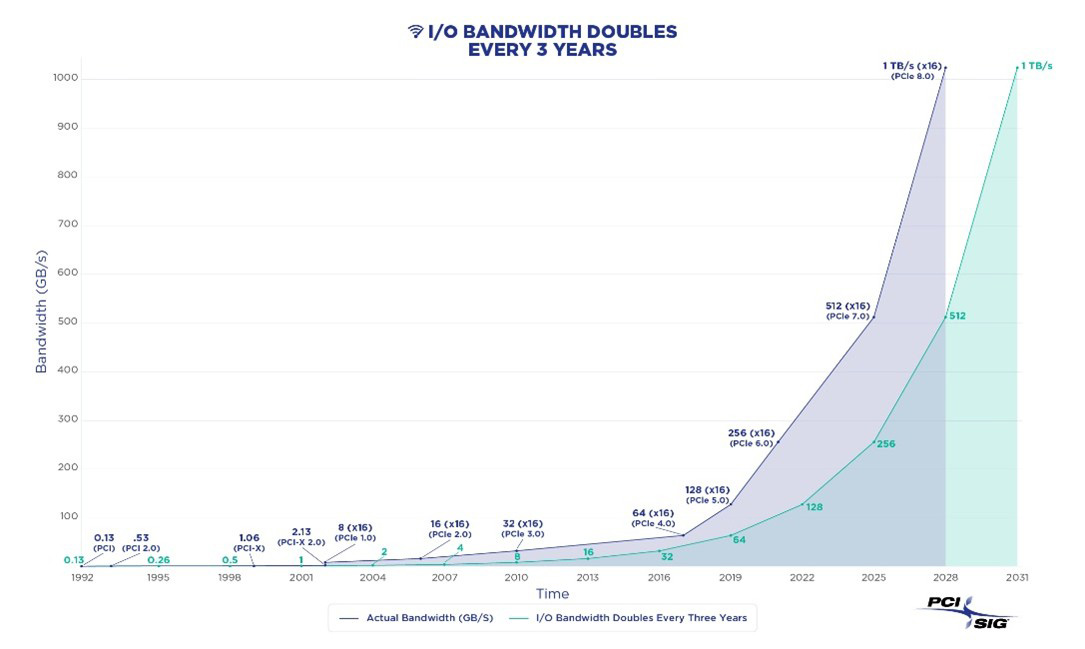

PCIe Transitions Are Accelerating

Seven years passed between the debut of PCIe Gen 3 (8 gigatransfers/second, or GT/s) in 2010 and the release of PCIe Gen 4 (16 GT/s) in 2017.¹ Commercial adoption, meanwhile, took closer to a full decade.²

Toward a terabit (per second): PCIe standards are being developed and adopted at a faster rate to keep up with the chip-to-chip interconnect speeds needed by system designers.
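To make the transfer rates above more concrete, here is a minimal sketch that converts GT/s into approximate one-direction link bandwidth. It assumes the 128b/130b line encoding used by PCIe Gen 3 and Gen 4 and ignores packet and protocol overhead, so real-world throughput is lower. The x16 link width and the function name `link_bandwidth_gb_s` are illustrative choices, not something taken from the post.

```python
# Approximate one-direction PCIe bandwidth from the raw transfer rate.
# Assumption: 128b/130b line encoding (PCIe Gen 3 and Gen 4).
# Packet headers and flow-control overhead are ignored.

ENCODING_EFFICIENCY = 128 / 130

# Transfer rates quoted in the text, in gigatransfers per second per lane.
RATES_GT_S = {"Gen 3": 8, "Gen 4": 16}


def link_bandwidth_gb_s(rate_gt_s: float, lanes: int) -> float:
    """Return approximate one-direction bandwidth in gigabytes per second."""
    gbit_per_lane = rate_gt_s * ENCODING_EFFICIENCY  # payload Gbit/s per lane
    return gbit_per_lane * lanes / 8                 # bits -> bytes


for gen, rate in RATES_GT_S.items():
    # x16 is a hypothetical link width chosen for illustration.
    print(f"{gen} x16: {link_bandwidth_gb_s(rate, 16):.2f} GB/s")
```

Under these assumptions, a x16 link works out to roughly 15.75 GB/s per direction for Gen 3 and 31.51 GB/s for Gen 4, which shows how each generation doubles the available bandwidth.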

By Michael Kanellos, Head of Influencer Relations, Marvell

The opportunity for custom silicon isn’t just getting larger – it’s becoming more diverse.

At the Custom AI Investor Event, Marvell executives outlined how the push to advance accelerated infrastructure is driving surging demand for custom silicon – reshaping the customer base, product categories and underlying technologies. (Here is a link to the recording and presentation slides.)

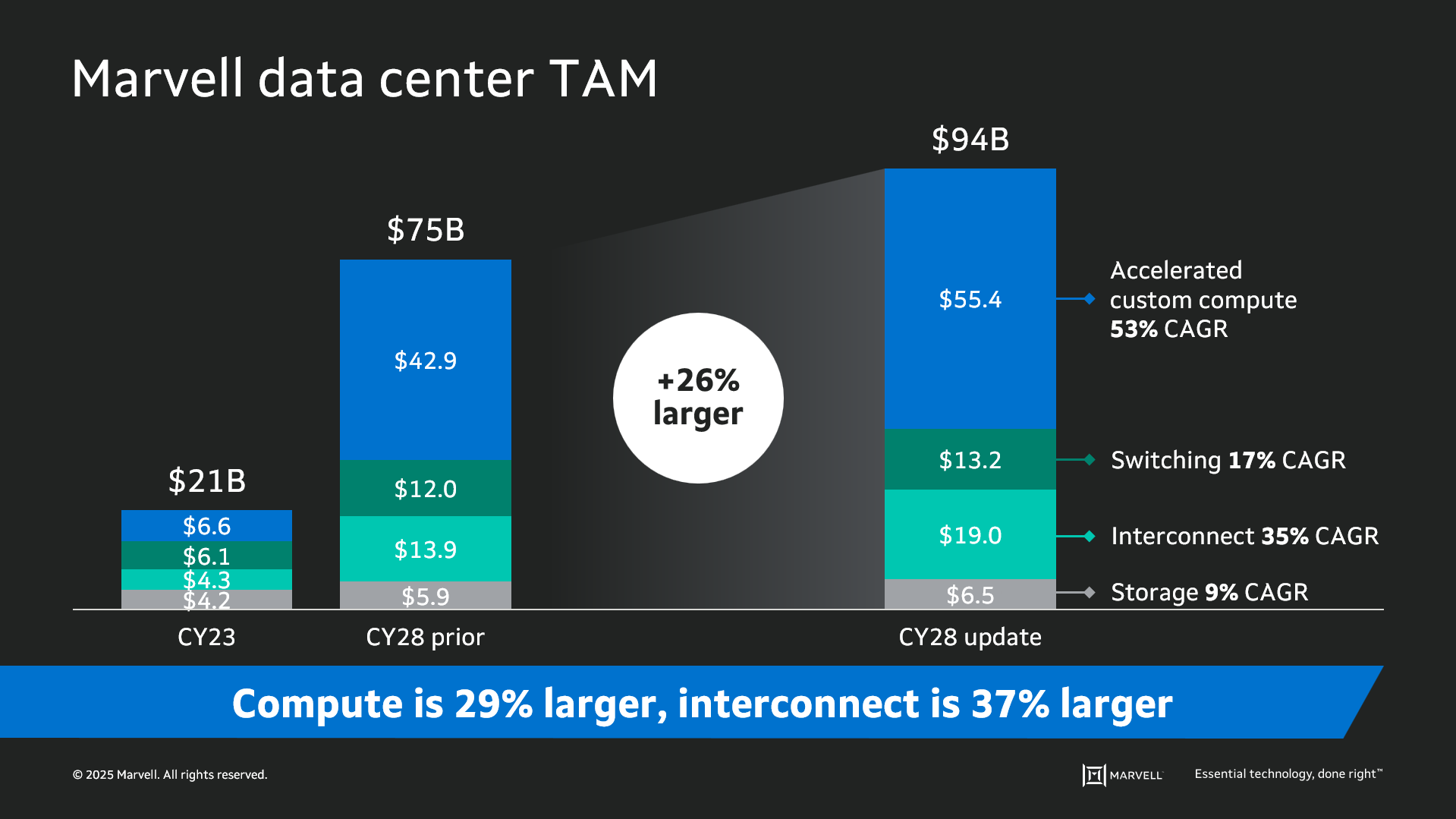

Data infrastructure spending is now slated to surpass $1 trillion by 2028,¹ with the Marvell total addressable market (TAM) for data center semiconductors rising to $94 billion by then, 26% larger than the forecast a year earlier. Of that total, $55.4 billion revolves around custom devices for accelerated compute.¹ In fact, the forecast for every major product segment has risen in the past year, underscoring the growing momentum behind custom silicon.

The deeper you go into the numbers, the more compelling the story becomes. The custom market is evolving into two distinct elements: the XPU segment, focused on optimized CPUs and accelerators, and the XPU attach segment that includes PCIe retimers, co-processors, CPO components, CXL controllers and other devices that serve to increase the utilization and performance of the entire system. Meanwhile, the TAM for custom XPUs is expected to reach $40.8 billion by 2028, growing at a 47% CAGR.¹

By Nicola Bramante, Senior Principal Engineer

Transimpedance amplifiers (TIAs) are one of the unsung heroes of the cloud and AI era.

At the recent OFC 2025 event in San Francisco, exhibitors demonstrated the latest progress on 1.6T optical modules featuring Marvell 200G TIAs. Recognized by multiple hyperscalers for their superior performance, Marvell 200G TIAs are becoming a standard component in 200G/lane optical modules for 1.6T deployments.

TIAs take the small electrical currents that photodetectors generate from incoming optical signals and convert them into voltage signals, so the underlying data can be transmitted between and used by servers and processors in data centers and in scale-up and scale-out networks. Put another way, TIAs allow data to travel from photons to electrons. TIAs also amplify the signals for optical digital signal processors, which filter out noise and preserve signal integrity.
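The photon-to-electron path described above can be approximated with a simple linear model: a photodetector turns optical power into current, and the TIA turns that current into voltage. The sketch below is an idealized illustration only. The optical power, responsivity and transimpedance gain are hypothetical placeholder values, not Marvell specifications, and the model ignores bandwidth limits and noise.

```python
# Idealized photodetector + TIA model (linear, noiseless).
# All numeric values below are hypothetical, chosen for illustration.


def photocurrent_a(optical_power_w: float, responsivity_a_per_w: float) -> float:
    """Photodetector: optical power (W) -> current (A)."""
    return optical_power_w * responsivity_a_per_w


def tia_output_v(current_a: float, transimpedance_ohm: float) -> float:
    """Ideal TIA: input current (A) -> output voltage (V)."""
    return current_a * transimpedance_ohm


optical_power = 100e-6   # 100 microwatts received (hypothetical)
responsivity = 0.8       # A/W (hypothetical photodetector)
gain = 5_000             # ohms of transimpedance gain (hypothetical)

current = photocurrent_a(optical_power, responsivity)
voltage = tia_output_v(current, gain)

print(f"photocurrent: {current * 1e6:.1f} uA")
print(f"TIA output:   {voltage * 1e3:.0f} mV")
```

With these placeholder values, about 80 microamps of photocurrent becomes a signal of about 400 millivolts, a level that downstream circuitry such as an optical DSP can work with.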

And they are pervasive. Virtually every data link inside a data center longer than three meters includes an optical module (and hence a TIA) at each end. TIAs are critical components of fully retimed optics (FRO), transmit retimed optics (TRO) and linear pluggable optics (LPO), enabling scale-up servers with hundreds of XPUs, active optical cables (AOC), and other emerging technologies, including co-packaged optics (CPO), where TIAs are integrated into optical engines that can sit on the same substrates where switch or XPU ASICs are mounted. TIAs are also essential for long-distance ZR/ZR+ interconnects, which have become the leading solution for connecting data centers and telecom infrastructure. Overall, TIAs are a must-have component for any optical interconnect solution, and the market for interconnects is expected to triple to $11.5 billion by 2030, according to LightCounting.