By Kishore Atreya, Senior Director of Cloud Platform Marketing, Marvell

Milliseconds matter.

It’s one of the fundamental laws of AI and cloud computing. Reducing the time required to run an individual workload frees up infrastructure to perform more work, which in turn creates an opportunity for cloud operators to potentially generate more revenue. Because they perform billions of simultaneous operations and operate on a 24/7/365 basis, time literally is money to cloud operators.

Marvell specifically designed the Marvell® Teralynx® 10 switch to optimize infrastructure for the intense performance demands of the cloud and AI era. Benchmark tests show that Teralynx 10 operates at a low and predictable latency of 500 nanoseconds, a critical precursor for reducing time-to-completion.1 The 512-radix design of Teralynx 10 also means that large clusters or data centers with networks built around the device (versus 256-radix switch silicon) need up to 40% fewer switches, 33% fewer networking layers and 40% fewer connections to provide an equivalent level of aggregate bandwidth.2 Less equipment, of course, paves the way for lower costs, lower energy consumption and better use of real estate.
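
To see where figures like these can come from, here is a rough back-of-the-envelope sketch. It assumes a fully provisioned, non-blocking folded Clos fabric and compares a 51.2 Tbps chip used as 512 x 100G ports against the same capacity used as 256 x 200G ports. The port speeds, the topology model and the function names are illustrative assumptions, not Marvell's published methodology.

```python
def max_endpoints(radix: int, tiers: int) -> int:
    """Endpoint ports a non-blocking folded Clos offers with this many tiers."""
    # One tier is a single switch (radix ports); every extra tier
    # multiplies the reach by radix / 2.
    return 2 * (radix // 2) ** tiers


def tiers_needed(endpoints: int, radix: int) -> int:
    """Smallest tier count whose fabric can attach all endpoints."""
    tiers = 1
    while max_endpoints(radix, tiers) < endpoints:
        tiers += 1
    return tiers


def switches_needed(endpoints: int, radix: int) -> int:
    """Switch count for a fully provisioned non-blocking fabric."""
    tiers = tiers_needed(endpoints, radix)
    # Every tier below the top needs endpoints / (radix / 2) switches and
    # the top tier needs endpoints / radix, i.e. (2 * tiers - 1) * N / radix.
    numerator = (2 * tiers - 1) * endpoints
    return (numerator + radix - 1) // radix  # round up


# Assumed example: the same aggregate bandwidth served two ways.
AGGREGATE_GBPS = 13_107_200

ports_100g = AGGREGATE_GBPS // 100   # endpoints when each port is 100G
ports_200g = AGGREGATE_GBPS // 200   # endpoints when each port is 200G

tiers_512 = tiers_needed(ports_100g, 512)
tiers_256 = tiers_needed(ports_200g, 256)
switches_512 = switches_needed(ports_100g, 512)
switches_256 = switches_needed(ports_200g, 256)

fewer_switches = 1 - switches_512 / switches_256
fewer_tiers = 1 - tiers_512 / tiers_256
print(f"512-radix: {tiers_512} tiers, {switches_512} switches")
print(f"256-radix: {tiers_256} tiers, {switches_256} switches")
print(f"{fewer_switches:.0%} fewer switches, {fewer_tiers:.0%} fewer tiers")
```

The example bandwidth is deliberately chosen at the point where the 256-radix fabric needs a third tier while the 512-radix fabric still fits in two, which is why it illustrates the "up to" end of the range. Smaller clusters that fit in two tiers either way would show a smaller gap.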

Recently, we also teamed up with Keysight to provide deeper detail on another crucial feature: auto-load balancing (ALB), the ability of Teralynx 10 to even out traffic between ports based on current and anticipated loads. Like a highway system, spreading traffic more evenly across lanes in networks prevents congestion and reduces cumulative travel time. Without it, a crisis in one location becomes a problem for the entire system.
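
The highway analogy can be made concrete with a toy model. The sketch below compares a static, hash-style link choice with a simple "pick the least-loaded link" policy. It is only an illustration of the general idea; it is not the ALB algorithm implemented in Teralynx 10, and the flow IDs, rates and function names are made up for the example.

```python
def static_hash(flows, n_links):
    """Place each flow on a link chosen only by its ID (load-unaware)."""
    loads = [0] * n_links
    for flow_id, gbps in flows:
        loads[flow_id % n_links] += gbps
    return loads


def least_loaded(flows, n_links):
    """Place each flow on whichever link currently carries the least traffic."""
    loads = [0] * n_links
    for _flow_id, gbps in flows:
        target = loads.index(min(loads))  # ties go to the lowest link index
        loads[target] += gbps
    return loads


# Four equal flows; three of the IDs happen to collide under the static policy.
flows = [(0, 100), (4, 100), (8, 100), (1, 100)]

static_loads = static_hash(flows, n_links=4)
adaptive_loads = least_loaded(flows, n_links=4)

print("static:  ", static_loads, "busiest link:", max(static_loads))
print("adaptive:", adaptive_loads, "busiest link:", max(adaptive_loads))
```

In collective workloads the job finishes only when the slowest transfer finishes, so the busiest link is the number to watch: the load-aware policy in this toy case keeps every link at the same level while the static policy piles three flows onto one lane.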

Better Load Balancing, Better Traffic Flow

To test our hypothesis that smarter load balancing yields better load distribution, we created a scenario with Keysight AI Data Center Builder (KAI DC Builder) to measure port utilization and job completion time across different AI collective workloads. Built around a spine-leaf topology with four nodes, KAI DC Builder supports a range of collective algorithms, including all-to-all, all-reduce, all-gather, reduce-scatter, and gather. It facilitates the generation of RDMA traffic and operates using the RoCEv2 protocol. (In layperson’s terms, KAI DC Builder along with Keysight’s AresONE-M 800GE hardware platform enabled us to create a spectrum of test tracks.)

To generate AI traffic workloads, we used the Keysight Collective Communication Benchmark (KCCB) application. This application is installed as a container on the server, along with the supporting Docker containers provided by Keysight.

In our tests, the Keysight AresONE-M 800GE was connected to a Teralynx 10 Top-of-Rack (ToR) switch via sixteen 400G OSFP ports. The ToR switch in turn was linked to a Teralynx 10 system configured as a leaf switch. We then measured port utilization and job completion time. All Teralynx 10 systems were loaded with SONiC.

By Ravindranath C Kanakarajan, Senior Principal Engineer, Switch BU

Marvell has been actively involved with SONiC since its beginning, with many SONiC switches powered by Marvell® ASICs deployed at hyperscalers worldwide. One of Marvell's goals has been to enhance SONiC to address common issues and optimize its performance for large-scale deployments.

The Challenge

Many hackathon projects have focused on improving the monitoring, troubleshooting, debuggability, and testing of SONiC. However, we believe one of the core roles of a network operating system (NOS) is to optimize the use of the hardware data plane (i.e., the NPUs and networking ASICs). As workloads become increasingly demanding, it becomes crucial to maximize the efficiency of the data plane. Commercial black-box NOSes are tailored to specific NPUs/ASICs to achieve optimal performance. SONiC, however, supports a diverse range of NPUs/ASICs, presenting a unique challenge.

We at Marvell have been contributing features to SONiC to ensure optimal use of the underlying networking ASIC resources. Over time, we’ve recognized the need to provide operators with flexibility in utilizing ASIC resources while reducing the platform-specific complexity gradually being introduced into SONiC’s core component, the Orchagent. This approach will help SONiC operators to maintain consistent device configurations even when using devices from different platform vendors.

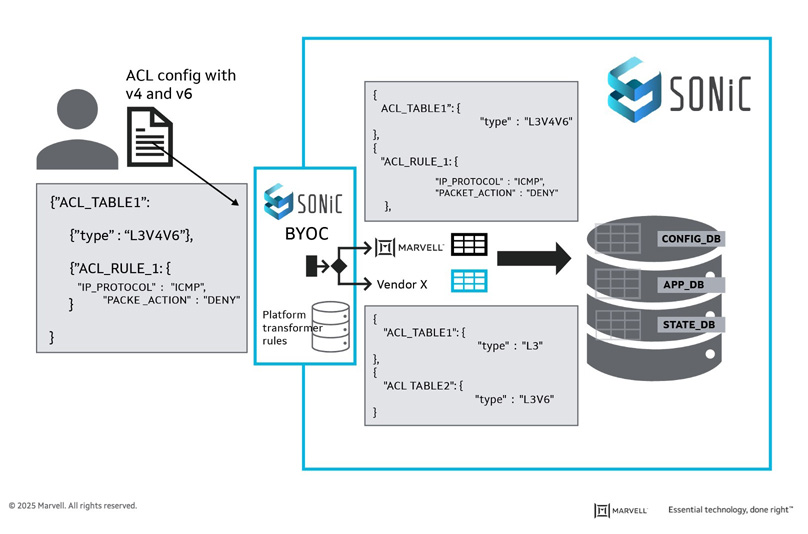

BYOC

During the Hackathon, we developed a framework called “BYOC: Bring Your Own Configuration,” allowing networking ASIC vendors to expose their hardware capabilities in a file describing intent. A new agent transforms the user’s configuration into an optimal SONiC configuration based on the capabilities file. This approach allows ASIC vendors to ensure that user configurations are converted to optimal ASIC configurations. It also allows SONiC operators to fine-tune the hardware resources consumed based on the deployment needs. It further helps in optimally migrating configurations from vendor NOS to SONiC based on the SONiC platform’s capability.
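
As a purely hypothetical illustration of the idea, the sketch below models a vendor capabilities file as a set of named resource profiles and an "agent" that picks the first profile able to satisfy the operator's stated needs. The schema, the profile names, the resource keys and the `select_profile` function are all invented for this example; the actual BYOC file format and agent logic are not described in this post.

```python
from typing import Optional

# Hypothetical contents of a vendor capabilities file, listed from the
# vendor's most preferred profile to the least preferred one.
CAPABILITIES = {
    "profiles": {
        "balanced": {"ipv4_routes": 128_000, "ipv6_routes": 64_000, "acl_entries": 8_000},
        "l3_heavy": {"ipv4_routes": 512_000, "ipv6_routes": 128_000, "acl_entries": 2_000},
        "acl_heavy": {"ipv4_routes": 64_000, "ipv6_routes": 32_000, "acl_entries": 32_000},
    }
}


def select_profile(intent: dict, capabilities: dict) -> Optional[str]:
    """Return the first profile whose limits cover every requested resource."""
    for name, limits in capabilities["profiles"].items():
        if all(limits.get(resource, 0) >= needed for resource, needed in intent.items()):
            return name
    return None  # no profile on this platform can satisfy the intent


# Hypothetical operator intent: what the deployment needs, not how to get it.
routing_intent = {"ipv4_routes": 200_000, "acl_entries": 1_000}
chosen = select_profile(routing_intent, CAPABILITIES)

impossible_intent = {"ipv4_routes": 600_000, "acl_entries": 10_000}
rejected = select_profile(impossible_intent, CAPABILITIES)

print("routing intent ->", chosen)
print("impossible intent ->", rejected)
```

The point of the separation is that the operator's intent stays the same across platforms, while each vendor's capabilities file decides how that intent maps onto its own hardware resources.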

By Amit Sanyal, Senior Director, Product Marketing, Marvell

If you’re one of the 100+ million monthly users of ChatGPT—or have dabbled with Google’s Bard or Microsoft’s Bing AI—you’re proof that AI has entered the mainstream consumer market.

And what has entered the consumer mass market will inevitably make its way to the enterprise, an even larger market for AI. There are hundreds of generative AI startups racing to make it so. And those responsible for making these AI tools accessible, the cloud data center operators, are investing heavily to keep up with current and anticipated demand.

Of course, it’s not just the latest AI language models driving the coming infrastructure upgrade cycle. Operators will pay equal attention to improving general purpose cloud infrastructure too, as well as take steps to further automate and simplify operations.

To help operators meet their scaling and efficiency objectives, today Marvell introduces Teralynx® 10, a 51.2 Tbps programmable 5nm monolithic switch chip designed to address the operator bandwidth explosion while meeting stringent power- and cost-per-bit requirements. It’s intended for leaf and spine applications in next-generation data center networks, as well as AI/ML and high-performance computing (HPC) fabrics.

A single Teralynx 10 replaces twelve chips of the 12.8 Tbps generation, the last to see widespread deployment. The resulting savings are impressive: an 80% power reduction for equivalent capacity.
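
The figure of twelve may look surprising given that 51.2 / 12.8 is only 4. One plausible way to arrive at it, assuming the smaller chips are arranged as a non-blocking two-tier leaf-spine fabric that presents 51.2 Tbps of external bandwidth, is sketched below. The topology assumption and the function name are ours, not something stated in the post.

```python
def chips_for_equivalent_capacity(target_gbps: int, chip_gbps: int) -> dict:
    """Count chips in a non-blocking two-tier fabric built from smaller chips."""
    # A leaf splits its capacity evenly: half faces outward, half faces the spines.
    external_per_leaf = chip_gbps // 2
    leaves = -(-target_gbps // external_per_leaf)  # ceiling division
    # The spines must carry all of the leaves' uplink bandwidth.
    uplink_gbps = leaves * (chip_gbps - external_per_leaf)
    spines = -(-uplink_gbps // chip_gbps)          # ceiling division
    return {"leaves": leaves, "spines": spines, "total": leaves + spines}


result = chips_for_equivalent_capacity(target_gbps=51_200, chip_gbps=12_800)
print(result)
```

Under these assumptions, eight leaf chips and four spine chips are needed, which matches the twelve-to-one replacement ratio quoted above.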

Copyright © 2026 Marvell, All rights reserved.