How AI Will Drive Cloud Switch Innovation

This article is part five in a series on talks delivered at Accelerated Infrastructure for the AI Era, a one-day symposium held by Marvell in April 2024.

AI has fundamentally changed the network switching landscape. AI requirements are driving foundational shifts in the industry roadmap, expanding the use cases for cloud switching semiconductors and creating opportunities to redefine the terrain.

Here’s how AI will drive cloud switching innovation.

A changing network requires a change in scale

In a modern cloud data center, compute servers are connected to each other and to the internet through a network of high-bandwidth switches. The approach mirrors that of the internet itself, allowing operators to build a network of any size while mixing and matching products from various vendors to create a network architecture specific to their needs.

Such a high-bandwidth switching network is critical for AI applications, and a higher-performing network can lead to a more profitable deployment.

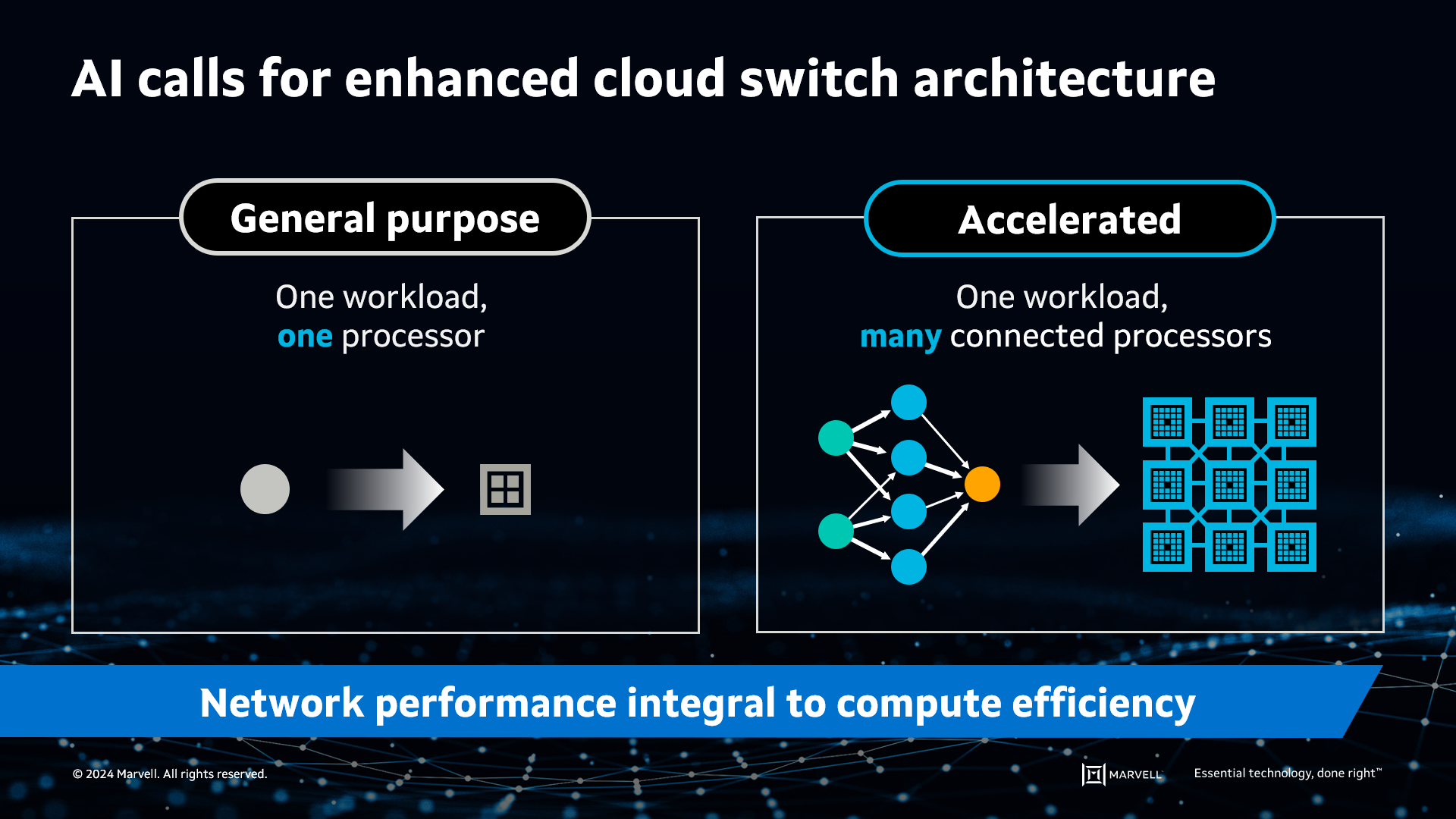

However, extending the general-purpose cloud network to AI isn’t as simple as adding more building blocks. In general-purpose computing, one or more workloads can fit on a single server CPU. In contrast, AI’s large datasets don’t fit on a single processor, whether it’s a CPU, GPU or other accelerated compute device (XPU), so the workload must be distributed across multiple processors. These accelerated processors must then function as a single computing element.

AI requires accelerated infrastructure to split workloads across many processors.

For that to happen, the network that distributes the AI workload across thousands (or more) of accelerators must have much higher capacity than the network used for general-purpose computing, and it must deliver predictable latency.
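The talk does not say which communication pattern drives this capacity requirement. One common pattern in distributed training is an all-reduce, in which every accelerator combines its partial results with those of all the others. The Python sketch below assumes a ring all-reduce (our choice of illustration, not a detail from the source) and estimates how much data each accelerator sends per collective, plus a lower bound on how long that takes over a single link. The payload size and link speed used in the note afterward are hypothetical.

```python
def ring_allreduce_bytes_per_node(num_accelerators: int, payload_bytes: float) -> float:
    """Bytes each accelerator sends in one ring all-reduce (assumed pattern).

    A ring all-reduce runs a reduce-scatter phase and an all-gather phase.
    Each phase takes (N - 1) steps, and every step moves a 1/N slice of the
    payload, so each node sends 2 * (N - 1) / N * payload_bytes in total.
    """
    n = num_accelerators
    if n < 2:
        return 0.0  # a single device has nothing to exchange
    return 2 * (n - 1) / n * payload_bytes


def min_transfer_seconds(bytes_sent: float, link_gbps: float) -> float:
    """Lower bound on transfer time: bits to send divided by link rate in bits/s."""
    return bytes_sent * 8 / (link_gbps * 1e9)
```

For example, with 1,024 accelerators and a hypothetical 10 GB payload, `ring_allreduce_bytes_per_node(1024, 10e9)` is about 2.0e10 bytes per accelerator, and `min_transfer_seconds` for that volume over a hypothetical 400 Gbps link is roughly 0.4 s. Per-accelerator traffic approaches twice the payload however many accelerators join, while the number of ring steps, 2(N - 1), grows with N. Because each step waits on its slowest transfer, latency variation anywhere in the fabric stretches the whole collective, which is one way to read the emphasis on predictable latency.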

To get a sense of the capacity requirements, consider that in the frontend network of an AI data center, each AI accelerator is allocated two to three times as many ports as a general-purpose processor, and that ratio is expected to grow. The backend network must scale on a completely different, steeper growth curve, which requires a dedicated switching fabric to connect AI clusters.

How Marvell is meeting the needs of tomorrow—today

Network switches are among the semiconductor industry’s most complex devices. They require the most advanced process technology, hundreds of high-speed serial interfaces, and an architecture that balances features, high capacity, low latency and power-efficient implementation. Add to that the packet buffers, route tables and telemetry that cloud operators need to route traffic and to observe and react to traffic patterns across the network.

With AI, the switching stakes are even higher, and Marvell is one of the few companies prepared to meet the evolving needs of AI and the market.

“AI has triggered a rapid expansion in the market for cloud switching semiconductors,” said Nick Kucharewski, SVP and GM of Switching at Marvell. “Marvell has assembled one of the few teams in the industry that’s demonstrated the ability to deliver this class of products.”

In 2021, Marvell acquired Innovium, which had developed a clean-sheet cloud switching architecture capable of meeting hyperscale data center requirements. This architecture served as the foundation for the Marvell® Teralynx® 10 AI cloud network switch, which uses 5nm core process IP and 100G I/O to deliver 51.2 Tbps of switching capacity.
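To connect these figures with the "hundreds of high-speed serial interfaces" mentioned earlier, the arithmetic below divides aggregate switching capacity by per-lane and per-port rates. It assumes 51.2 Tbps of capacity (the published Teralynx 10 figure, sometimes rounded to 51 Tbps) and nominal 100 Gbps lanes. The port speeds are illustrative breakouts only, not a statement of which configurations the device supports.

```python
# Assumed figures: 51.2 Tbps aggregate capacity and nominal 100 Gbps serial lanes.
SWITCH_CAPACITY_GBPS = 51_200
LANE_RATE_GBPS = 100


def lanes_required(capacity_gbps: int, lane_gbps: int) -> int:
    """Number of serial lanes needed to carry the full switching capacity."""
    return capacity_gbps // lane_gbps


def ports_at_speed(capacity_gbps: int, port_speeds_gbps: list[int]) -> dict[int, int]:
    """How many ports of each (illustrative) speed the capacity divides into."""
    return {speed: capacity_gbps // speed for speed in port_speeds_gbps}


if __name__ == "__main__":
    print(lanes_required(SWITCH_CAPACITY_GBPS, LANE_RATE_GBPS))  # 512
    print(ports_at_speed(SWITCH_CAPACITY_GBPS, [100, 400, 800]))  # {100: 512, 400: 128, 800: 64}
```

Under these assumptions, the full capacity corresponds to 512 lanes of 100G I/O, which could be grouped, for instance, as 128 ports of 400G or 64 ports of 800G.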

A roadmap for AI cloud switching

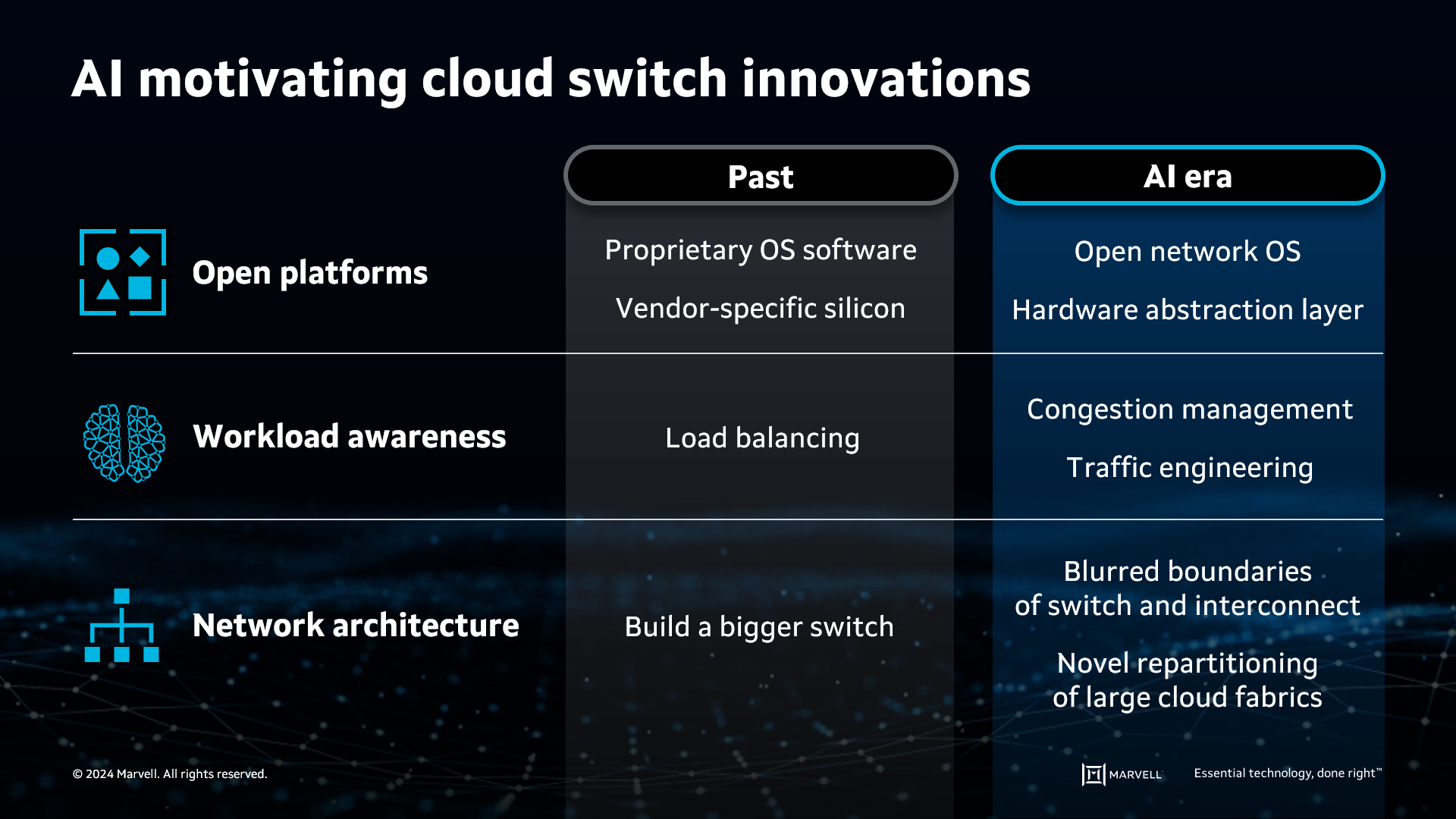

AI switching fabrics will continue to evolve to scale with AI applications. Marvell sees three ways AI will change cloud switching:

- Open platforms: In the past, it was impractical for an operator to target more than one chipset for its network switching equipment, because that equipment ran proprietary network software optimized for single-vendor silicon. Today, an open network OS (SONiC), combined with hardware abstraction layers that normalize differences between silicon implementations, allows equipment vendors to build more platforms faster. This lets operators transition to Marvell chipsets with shorter development timelines and lower development costs than before.

- Workload awareness: In the next wave, innovation will move from the management and orchestration layer down to the network layer, where the network will help decide where to place computing tasks within the cloud. In addition, more sophisticated traffic engineering techniques will reduce interactions across the fabric.

- Network architecture: AI will influence the architecture of the network itself. Over the past year, this has happened inside the compute cluster, where system partitioning and connectivity have redrawn the boundaries of the chipset. The next step is to apply this thinking to the fabric, blurring the boundary between switching and connectivity and ultimately repartitioning these large fabrics in new ways.
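The open-platforms point rests on a hardware abstraction layer: the network OS is written once against a common interface, and each silicon vendor supplies an implementation behind it. In SONiC, that role is played by the Switch Abstraction Interface (SAI), a C API. The Python sketch below is a toy illustration of the pattern only. `SwitchHal`, `VendorAHal`, `VendorBHal` and `configure_uplink` are hypothetical names and do not reproduce SAI.

```python
from abc import ABC, abstractmethod


class SwitchHal(ABC):
    """Hypothetical hardware abstraction layer: the only API the NOS calls."""

    @abstractmethod
    def create_port(self, lanes: list[int], speed_gbps: int) -> str:
        """Group serial lanes into a port and return a port identifier."""

    @abstractmethod
    def add_route(self, prefix: str, port_id: str) -> None:
        """Forward traffic for `prefix` out of `port_id`."""

    @abstractmethod
    def routes(self) -> dict[str, str]:
        """Return installed routes as {prefix: port_id}."""


class VendorAHal(SwitchHal):
    """Hypothetical vendor A: names ports after their first lane, stores routes in a dict."""

    def __init__(self) -> None:
        self._table: dict[str, str] = {}

    def create_port(self, lanes: list[int], speed_gbps: int) -> str:
        return f"A-lane{lanes[0]}-{speed_gbps}G"

    def add_route(self, prefix: str, port_id: str) -> None:
        self._table[prefix] = port_id

    def routes(self) -> dict[str, str]:
        return dict(self._table)


class VendorBHal(SwitchHal):
    """Hypothetical vendor B: sequential port numbers, routes kept as a list."""

    def __init__(self) -> None:
        self._next_port = 0
        self._entries: list[tuple[str, str]] = []

    def create_port(self, lanes: list[int], speed_gbps: int) -> str:
        # Vendor B's identifier ignores lanes and speed; only the order matters.
        port_id = f"B-port{self._next_port}"
        self._next_port += 1
        return port_id

    def add_route(self, prefix: str, port_id: str) -> None:
        self._entries.append((prefix, port_id))

    def routes(self) -> dict[str, str]:
        return dict(self._entries)  # later entries for a prefix override earlier ones


def configure_uplink(hal: SwitchHal) -> dict[str, str]:
    """NOS-side logic written once against the HAL and reused on any silicon."""
    uplink = hal.create_port(lanes=[0, 1, 2, 3, 4, 5, 6, 7], speed_gbps=800)
    hal.add_route("0.0.0.0/0", uplink)  # default route out of the uplink
    return hal.routes()
```

`configure_uplink(VendorAHal())` returns `{'0.0.0.0/0': 'A-lane0-800G'}` and `configure_uplink(VendorBHal())` returns `{'0.0.0.0/0': 'B-port0'}`: the same NOS logic runs unchanged on both, and only the vendor-specific identifiers differ. That separation is what lets an equipment vendor bring up a new chipset by supplying a new implementation rather than rewriting the OS.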

AI will drive cloud switch innovation in open platforms, workload awareness, and network architecture.
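The workload-awareness bullet above describes the network helping decide where compute tasks run. As one hypothetical illustration, the sketch below assumes a leaf-spine fabric and places a job on the leaf switches with the most free accelerators first, so the job spans as few leaves as possible and less of its traffic has to cross the spine. The function name, the greedy heuristic and the leaf names are assumptions for illustration, not a Marvell or industry algorithm.

```python
def place_job(free_per_leaf: dict[str, int], accelerators_needed: int) -> dict[str, int]:
    """Greedy, topology-aware placement (hypothetical heuristic).

    Takes accelerators from the leaves with the most free capacity first,
    which minimizes the number of leaf switches the job spans.
    Returns {leaf_name: accelerators_taken}; raises ValueError if capacity is short.
    """
    if accelerators_needed > sum(free_per_leaf.values()):
        raise ValueError("not enough free accelerators")
    placement: dict[str, int] = {}
    remaining = accelerators_needed
    # Largest free capacity first; ties broken by leaf name so results are deterministic.
    for leaf, free in sorted(free_per_leaf.items(), key=lambda kv: (-kv[1], kv[0])):
        if remaining == 0:
            break
        take = min(free, remaining)
        if take > 0:
            placement[leaf] = take
            remaining -= take
    return placement
```

With free capacity `{'leaf1': 8, 'leaf2': 16, 'leaf3': 32}` and a 40-accelerator job, `place_job` returns `{'leaf3': 32, 'leaf2': 8}`, spanning two leaves, whereas filling leaves in name order would span all three. Largest-first minimizes the number of leaves a job touches, but it ignores fragmentation (it can break up a large free block that a later job needed), so a production scheduler would weigh more factors.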

As technology inflections push the market forward, Marvell will continue to expand its silicon and software roadmap to address market needs today and tomorrow.

“The requirements for AI drive shifts in the industry roadmap, which create new opportunities for product innovation,” said Kucharewski. “Marvell has the essential portfolio technology that enables us to innovate and lead in the next wave of the market.”

# # #

This blog contains forward-looking statements within the meaning of the federal securities laws that involve risks and uncertainties. Forward-looking statements include, without limitation, any statement that may predict, forecast, indicate or imply future events, results or achievements. Actual events, results or achievements may differ materially from those contemplated in this blog. Forward-looking statements are only predictions and are subject to risks, uncertainties and assumptions that are difficult to predict, including those described in the “Risk Factors” section of our Annual Reports on Form 10-K, Quarterly Reports on Form 10-Q and other documents filed by us from time to time with the SEC. Forward-looking statements speak only as of the date they are made. Readers are cautioned not to put undue reliance on forward-looking statements, and no person assumes any obligation to update or revise any such forward-looking statements, whether as a result of new information, future events or otherwise.

Tags: AI, AI infrastructure, Data Center Interconnects, Data Center Interconnect Solutions, network switches

Copyright © 2026 Marvell, All rights reserved.