You’re likely assaulted daily with some zany and unverifiable AI factoid. By 2027, 93% of AI systems will be able to pass the bar, but limit their practice to simple slip and fall cases! Next-generation training models will consume more energy than all Panera outlets combined! etc. etc.

What can you trust? The stats below. Scouring the internet (and leaning heavily on 16 years of employment in the energy industry), I’ve compiled a list of somewhat credible and relevant stats that provide perspective on the energy challenge.

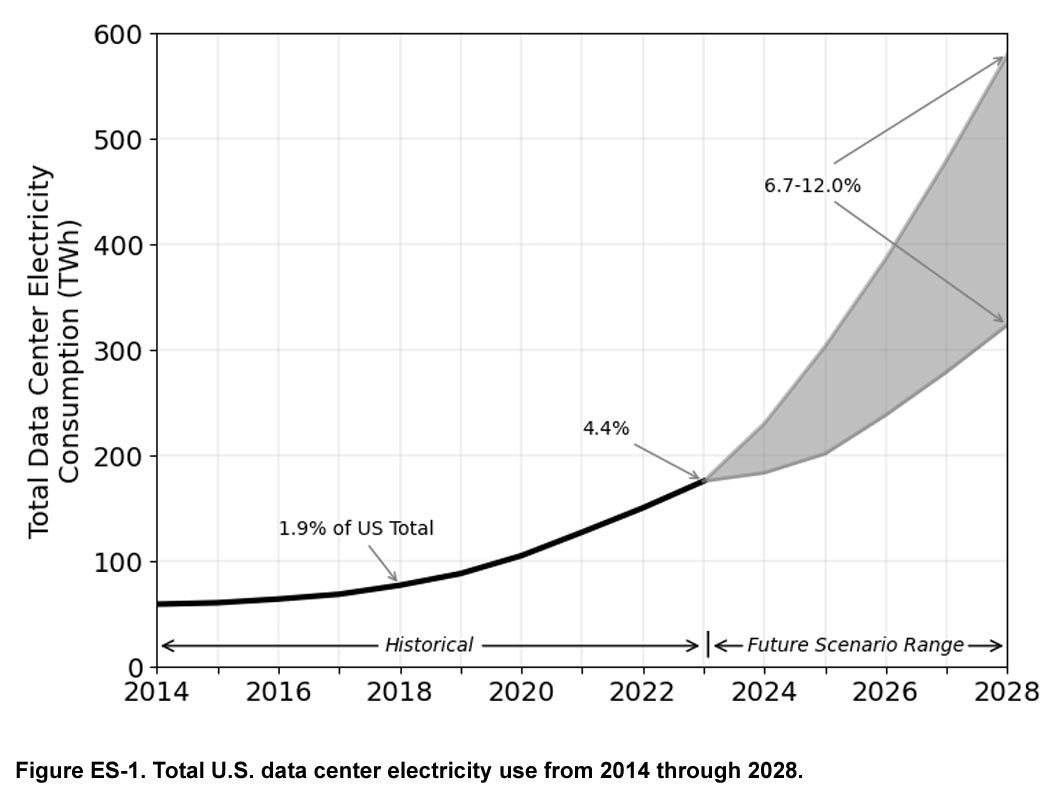

1. First, the Concerning News: Data Center Demand Could Nearly Triple in a Few Years

Lawrence Berkeley National Laboratory and the Department of Energy have issued their latest data center power report,[1] and it’s ominous.

U.S. data center power consumption rose from a stable 60-76 terawatt-hours (TWh) per year through 2018 to 176 TWh in 2023, or from 1.9% of total U.S. power consumption to 4.4%. By 2028, AI could push that share to 6.7%-12%. (For comparison, lighting consumes 15%.[2])

Report co-author Eric Masanet adds that the total doesn’t include bitcoin, which would add another 70 TWh to 2023’s consumption, roughly 40% on top of the 176 TWh. If you want, add a similar 30-40% to subsequent years too.
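As a quick sanity check on the bitcoin adjustment, the sketch below divides the 70 TWh estimate by the 176 TWh baseline and backs out the total U.S. consumption implied by the 4.4% share. The helper name is illustrative; the inputs are simply the figures quoted above.

```python
def share(part, whole):
    """Return part as a percentage of whole."""
    return 100.0 * part / whole

DATA_CENTER_2023_TWH = 176.0  # U.S. data center consumption, 2023 (report figure)
BITCOIN_2023_TWH = 70.0       # bitcoin add-on cited by Masanet
DATA_CENTER_SHARE_2023 = 4.4  # data centers as a percent of total U.S. consumption

bitcoin_uplift = share(BITCOIN_2023_TWH, DATA_CENTER_2023_TWH)
implied_us_total = DATA_CENTER_2023_TWH / (DATA_CENTER_SHARE_2023 / 100.0)

print(f"bitcoin adds {bitcoin_uplift:.1f}% to the 2023 total")
print(f"implied total U.S. consumption: {implied_us_total:,.0f} TWh")
```

The uplift comes out just under 40%, consistent with the upper end of the 30-40% rule of thumb, and the 4.4% share implies total U.S. consumption of about 4,000 TWh.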

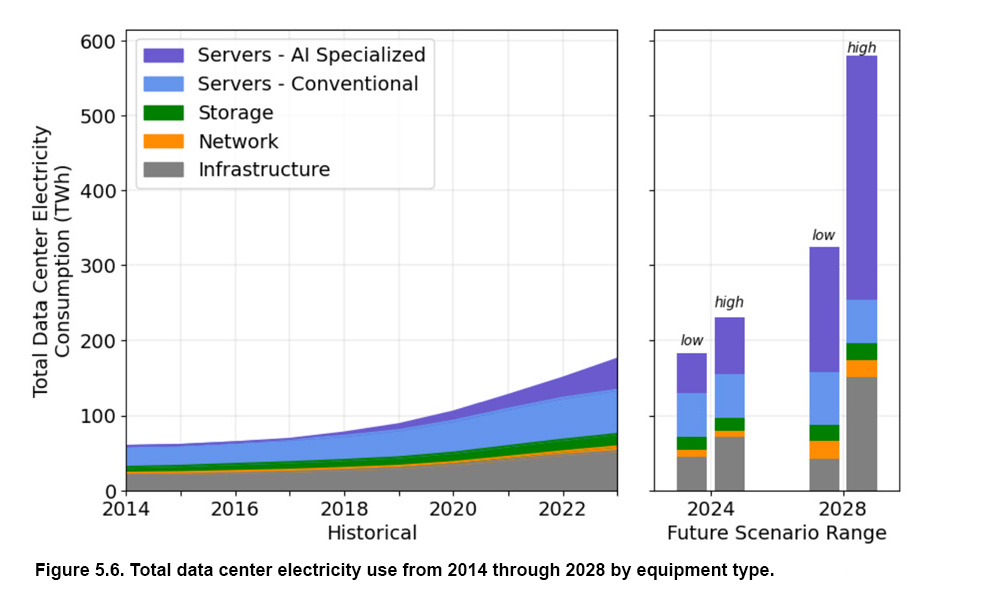

2. Power Is Rising Across Infrastructure

Servers and infrastructure account for most of the growth.

Above graphs: Lawrence Berkeley National Laboratory

Some of the surprises in the Berkeley Lab report: while average power per hard drive dropped from 8.6 watts to 6.4 watts between 2015 and 2024, average power per SSD rose from 6 to 11 watts because of higher capacity and performance demands.

Network power consumption, meanwhile, is slated to nearly double to 23 TWh in 2028, or 12% of the total. In 2028, 200/400G Ethernet accounts for 26% of network energy consumption, while InfiniBand is responsible for 45%.

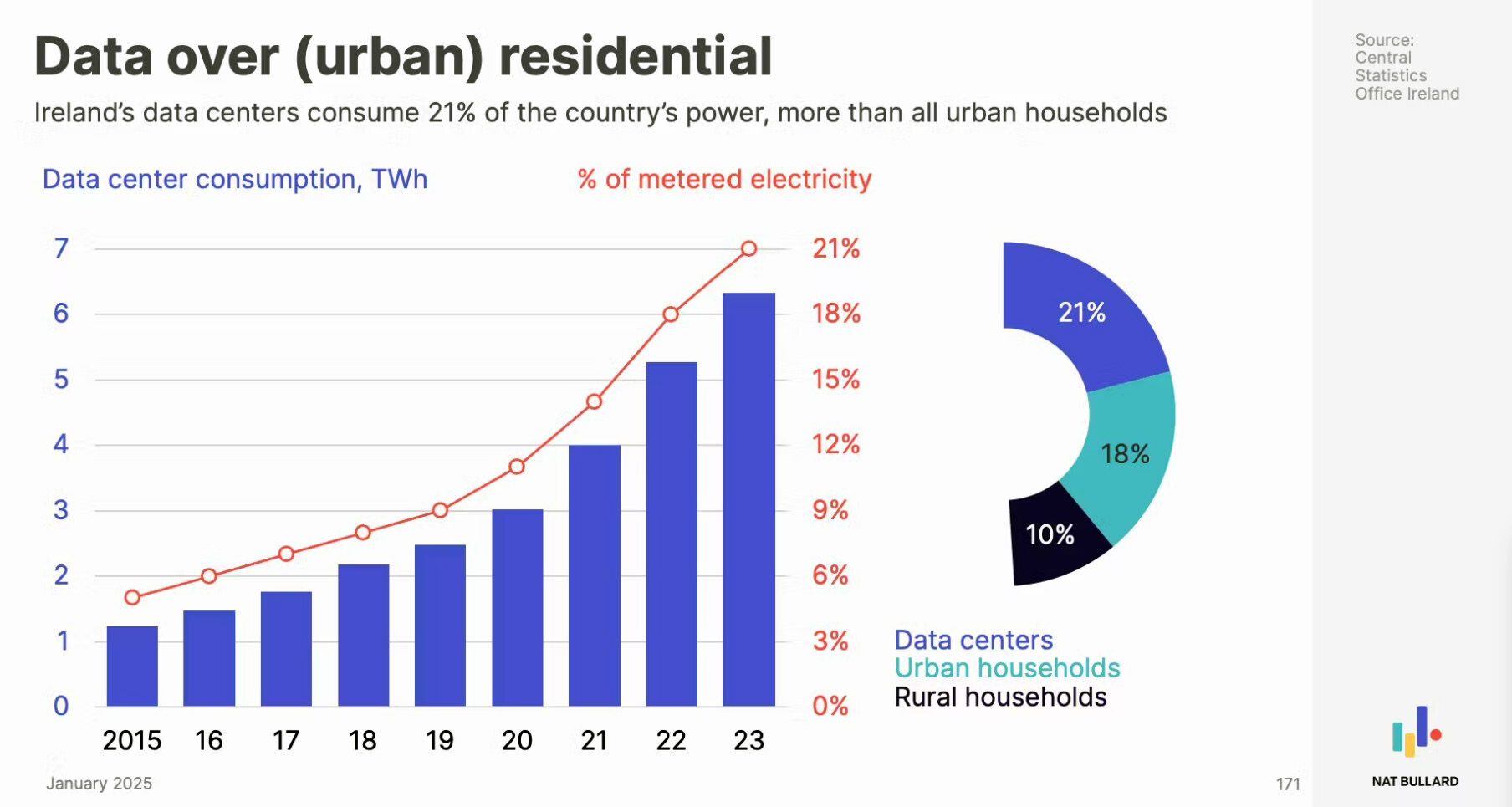

3. The Power Crunch Is Especially Bad in Some Locations

Like Ireland, where the share of power consumed by data centers has zipped to 21%, according to the Central Statistics Office of Ireland, prompting a halt on new permits.

4. So Expect Arguments Over Who Pays for Upgrades

New power infrastructure could require $2 trillion by 2030, says Bain & Company.[3] Typically, the cost of grid upgrades gets spread across the entire user base. American Electric Power (AEP) in Ohio is arguing for rate structures that would require data center operators to shoulder more of the cost of these upgrades through minimum purchase requirements or other mechanisms,[4] potentially raising costs for data centers.

Central Ohio, AEP notes, is on track to consume as much power as Manhattan by 2030 thanks to data center capacity.

5. Water Consumption Is Also Rising

Training GPT-3 in state-of-the-art data centers likely required around 5 million liters of water, with 700,000 of those liters ultimately evaporating.[5] (The remaining 4.3 million liters get recycled.) By 2027, water withdrawals for AI could rise to 4.2 to 6.6 billion cubic meters, roughly 2x-3x today. That would be 4x-6x the annual water withdrawal of Denmark and about half of what the UK uses. With 4 billion people across the globe facing water scarcity, it’s not a good look.

6. But Now the Better News: Efficiency (Historically) Works Wonders

Between 2000 and 2005, U.S. data center power consumption rose nearly 90%. Between 2005 and 2010, it climbed another 24%. But between 2010 and 2018, the years of explosive growth in smartphones, cloud services and streaming media, it barely budged.[6] Why the sudden change? Smarter cooling, more efficient chip designs, more extensive use of virtualization and better data center housekeeping.
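Growth figures stated for a whole period and figures stated per year compound very differently, and the two are easy to confuse. As a rough sketch (the helper names are illustrative), the code below converts the 90% and 24% figures both ways over their five-year windows.

```python
def annualized(total_growth, years):
    """Convert total growth over a period (0.90 = +90%) to a compound annual rate."""
    return (1.0 + total_growth) ** (1.0 / years) - 1.0

def cumulative(annual_growth, years):
    """Convert a compound annual rate into the total multiple over the period."""
    return (1.0 + annual_growth) ** years

for label, growth in [("2000-2005", 0.90), ("2005-2010", 0.24)]:
    print(f"{label}: +{growth:.0%} over 5 years = {annualized(growth, 5):.1%} per year; "
          f"+{growth:.0%} per year for 5 years = {cumulative(growth, 5):.1f}x")
```

A 90% rise spread over five years works out to under 14% a year, while 90% a year sustained for five years would be roughly a 25x increase, so it matters which reading a source intends.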

New technologies such as liquid cooling, custom processors and co-packaged optics will further flatten the curve as performance demands grow. Application efficiency (see DeepSeek) will play a role as well. The exact savings from each technology remain to be determined, but early results with custom AI chips show a 30%-plus improvement in price-performance.[7]

7. AI Power Consumption Is Comparatively Small

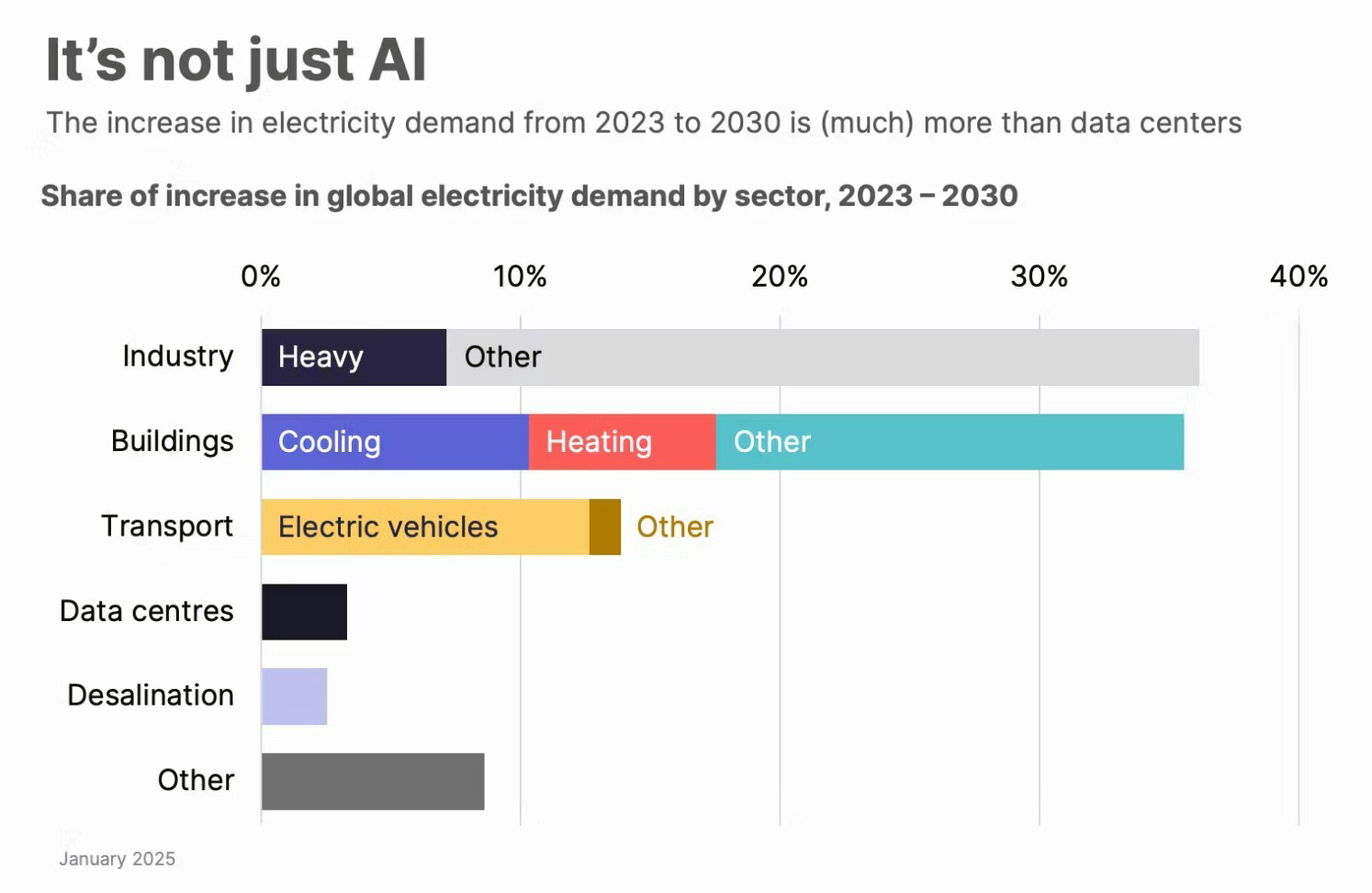

Check out this chart from Nat Bullard, drawing on International Energy Agency projections of power’s anticipated growth across sectors. Only desalination contributes less growth than data centers. In other words, other industries will be funding grid upgrades too.

8. Solar and Microgrids Look Viable

While nuclear grabs the headlines, it suffers from a few drawbacks: projects can take decades and massive amounts of capital.

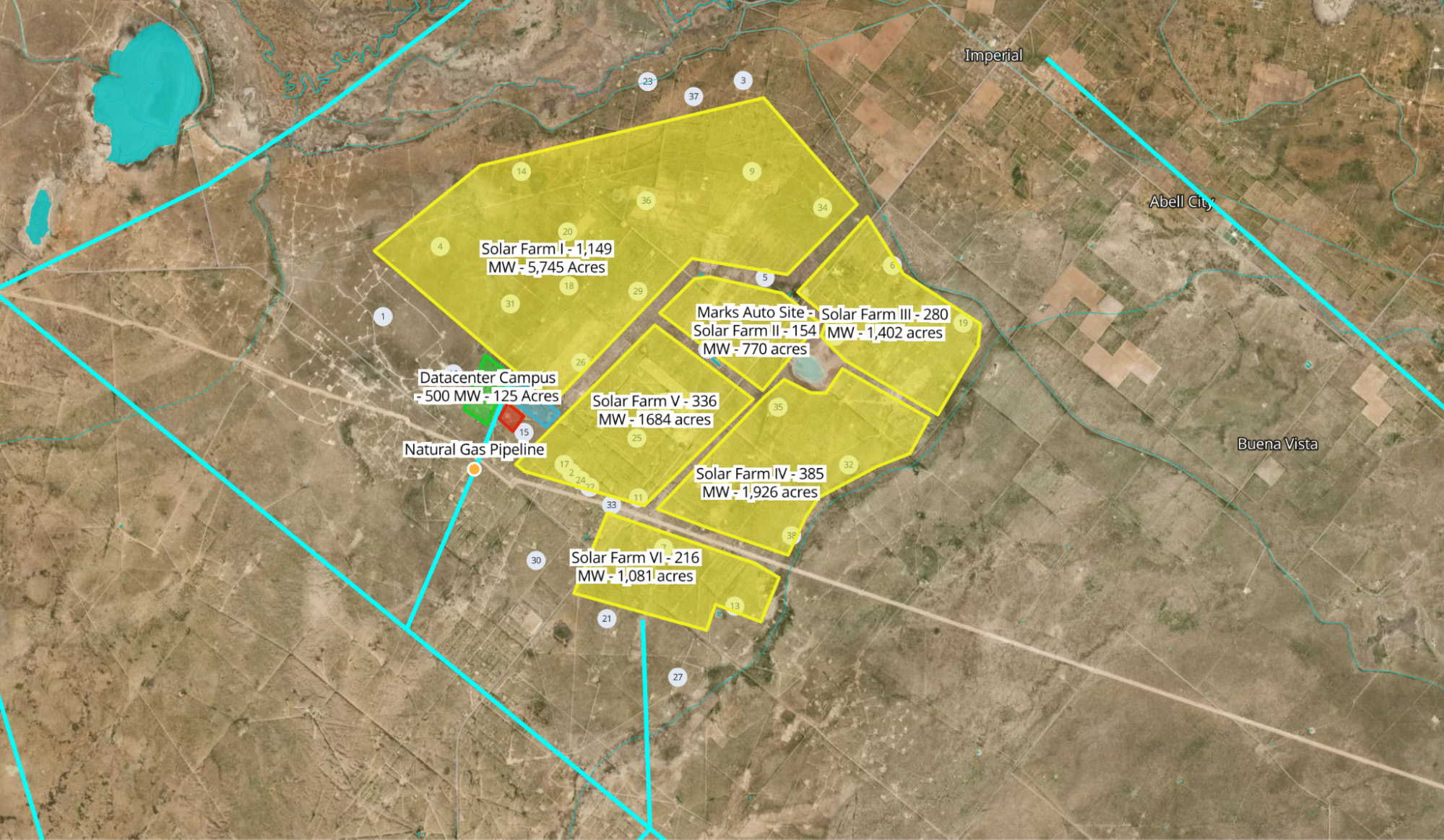

A large solar microgrid (i.e., 100 MW to 500 MW of solar and storage capacity within ten miles of each other, bound together on its own intelligent grid) can be built in two to four years. A 90% renewables/10% gas microgrid could deliver power for $109 per megawatt-hour, less than recommissioned nuclear ($130/MWh).[8]
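Per-MWh prices are easier to weigh once they are scaled to a real load. This sketch assumes a hypothetical data center drawing a flat 500 MW around the clock (the top of the microgrid range above) and applies the two quoted prices. The function and the flat-load assumption are illustrative only; real costs would also depend on financing, backup and how the load actually varies.

```python
HOURS_PER_YEAR = 8_760

def annual_cost_usd(load_mw, price_per_mwh, utilization=1.0):
    """Yearly energy cost for a load at a flat $/MWh price (hypothetical helper)."""
    return load_mw * HOURS_PER_YEAR * utilization * price_per_mwh

load_mw = 500                                # hypothetical flat 500 MW load
microgrid = annual_cost_usd(load_mw, 109)    # 90% renewables / 10% gas microgrid
nuclear = annual_cost_usd(load_mw, 130)      # recommissioned nuclear

savings = nuclear - microgrid
print(f"microgrid ${microgrid / 1e6:,.0f}M/yr vs nuclear ${nuclear / 1e6:,.0f}M/yr; "
      f"saves ${savings / 1e6:,.0f}M/yr ({savings / nuclear:.0%})")
```

Under that assumption, the gap is roughly $92 million a year, or about 16%.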

A 500 MW site covers around 20,000 acres, with 12,000 of them going to solar, 80 acres for storage and 1.25 acres for the data center. Across the western U.S., close to 32 million acres are available for this kind of development.[8]
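Dividing the available land by the per-site footprint gives a naive ceiling on how much capacity that land could host. This is a back-of-the-envelope sketch using only the figures above, and it assumes every acre is equally usable, ignoring water, permitting, terrain and fiber access, so treat it as an upper bound rather than a forecast.

```python
ACRES_PER_SITE = 20_000        # total footprint of one 500 MW site (from the text)
MW_PER_SITE = 500
AVAILABLE_ACRES = 32_000_000   # suitable land across the western U.S. (from the text)

sites = AVAILABLE_ACRES // ACRES_PER_SITE        # whole sites that fit
capacity_gw = sites * MW_PER_SITE / 1_000        # convert MW to GW
print(f"{sites:,} sites, about {capacity_gw:,.0f} GW of site capacity")
```

That works out to 1,600 sites, or about 800 GW, if every acre were used.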

Added bonus: by putting generation next to the data center, you avoid the 4% losses that come through transmission and distribution of power.

Image courtesy of the PACES project and Offgrid AI
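One subtlety in the 4% transmission and distribution figure: losses are taken out of what is generated, so serving a fixed load through the grid takes slightly more than 4% extra generation. A minimal sketch, assuming the 4% loss rate above and a hypothetical 500 MW data center:

```python
def generation_needed_mw(load_mw, loss_fraction):
    """Generation required upstream so that load_mw arrives after fractional losses."""
    return load_mw / (1.0 - loss_fraction)

LOSS = 0.04   # average T&D loss rate cited above
load = 500    # hypothetical 500 MW data center

generated = generation_needed_mw(load, LOSS)
lost = generated - load
print(f"grid-fed: {generated:.1f} MW generated, {lost:.1f} MW lost in transit")
```

Serving 500 MW over the grid would take about 520.8 MW of generation, so co-locating generation avoids roughly 20.8 MW of losses.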

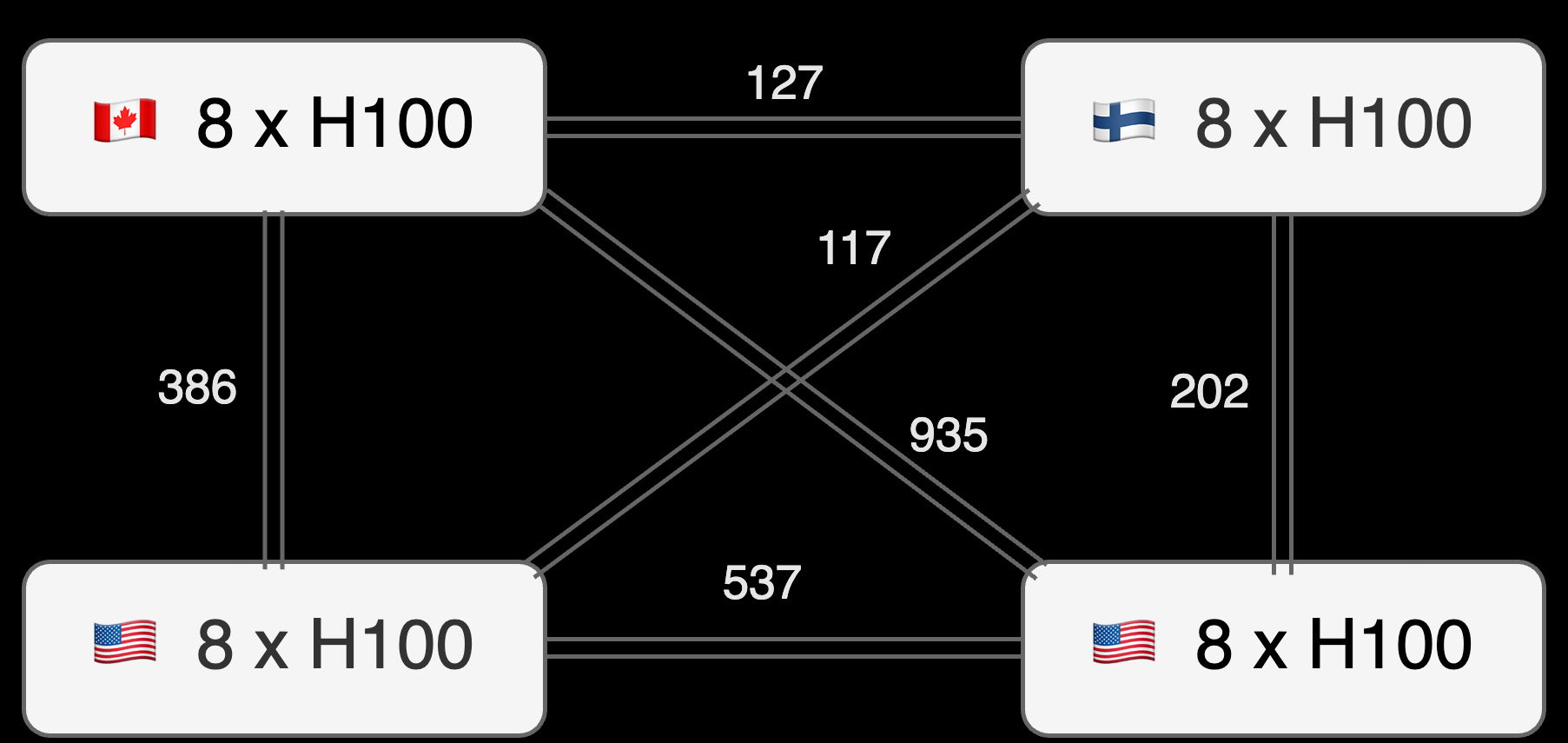

9. Virtual Hyperscalers Look Viable Too

Distributing workloads across smaller, modular data centers can reduce strain on the grid and accelerate infrastructure build-outs. Prime Intellect and its OpenDiLoCo project[9] have shown that large training jobs can be split among smaller data centers on different continents while still maintaining 90%-plus compute utilization. OpenDiLoCo also trained with slower communications and fewer messages between nodes. The project focused more on training than on energy, but it helps prove out the case for distributed AI.

The image shows average bidirectional bandwidth in a decentralized training setup spanning Canada, Finland and two locations in the U.S. Image courtesy of Prime Intellect - Decentralized Training, INTELLECT-1 and Alex Ferguson.
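To make “fewer messages between nodes” concrete, here is a heavily simplified sketch in the spirit of DiLoCo (Distributed Low-Communication training), the method OpenDiLoCo reproduces: each worker takes many local steps on its own data, then the workers exchange a single averaged update (a “pseudo-gradient”) that an outer optimizer applies with Nesterov momentum. Everything here is an illustrative assumption, not Prime Intellect’s code: a scalar parameter, a toy quadratic loss per worker, plain SGD as the inner optimizer (DiLoCo uses AdamW) and made-up function names and hyperparameters.

```python
def local_sgd(theta, target, steps, lr):
    """Run `steps` of plain SGD on one worker's toy loss (theta - target) ** 2."""
    for _ in range(steps):
        theta -= lr * 2.0 * (theta - target)
    return theta


def diloco(targets, rounds=50, inner_steps=20, inner_lr=0.05,
           outer_lr=0.7, momentum=0.9):
    """Toy DiLoCo-style loop; returns the final parameter and messages exchanged."""
    theta, velocity, messages = 0.0, 0.0, 0
    for _ in range(rounds):
        # Each worker starts from the shared parameter and trains locally.
        local = [local_sgd(theta, t, inner_steps, inner_lr) for t in targets]
        messages += len(targets)  # one exchange per worker per round
        # Pseudo-gradient: average drift of the workers away from theta.
        pseudo_grad = sum(theta - x for x in local) / len(local)
        # Outer step: SGD with Nesterov momentum (PyTorch-style update).
        velocity = momentum * velocity + pseudo_grad
        theta -= outer_lr * (pseudo_grad + momentum * velocity)
    return theta, messages


targets = [1.0, 2.0, 3.0, 6.0]  # each worker's data pulls toward a different value
ROUNDS, INNER_STEPS = 50, 20
theta, messages = diloco(targets, rounds=ROUNDS, inner_steps=INNER_STEPS)
per_step_messages = ROUNDS * INNER_STEPS * len(targets)  # if workers synced every step
print(f"theta = {theta:.4f}, messages = {messages} vs {per_step_messages}")
```

In this toy run, the workers exchange 200 messages instead of the 4,000 a sync-every-step scheme would need, and still settle at 3.0, the value that minimizes their combined loss (the mean of the targets).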

10. AI Can Offset Emissions Elsewhere

The World Economic Forum has stated that digital technology has the potential to reduce worldwide emissions by 20% by 2050.[10] Data centers are well positioned to play a leading role by running the algorithms that fine-tune consumption in factories, buildings, homes and other places.

For AI specifically, the data for a full cost/benefit analysis is still not fully baked, but the thesis holds: AI can conserve resources in unobtrusive ways that would otherwise be out of reach.

1. LBL, December 2024.

2. Fraunhofer Institute for Building Physics.

3. Bain & Co.

4. Utility Dive, January 2025.

5. UC Riverside and UT Arlington, October 2023.

6. LBL, 2016.

7. Data Center Knowledge, December 2024.

8. “Fast, Scalable, Clean and Cheap Enough: How Off-Grid Solar Microgrids Can Power the AI Race,” December 2024. Image courtesy of the PACES project.

9. Prime Intellect, July 2024.

# # #

Marvell and the M logo are trademarks of Marvell or its affiliates. Please visit www.marvell.com for a complete list of Marvell trademarks. Other names and brands may be claimed as the property of others.

This blog contains forward-looking statements within the meaning of the federal securities laws that involve risks and uncertainties. Forward-looking statements include, without limitation, any statement that may predict, forecast, indicate or imply future events or achievements. Actual events or results may differ materially from those contemplated in this blog. Forward-looking statements are only predictions and are subject to risks, uncertainties and assumptions that are difficult to predict, including those described in the “Risk Factors” section of our Annual Reports on Form 10-K, Quarterly Reports on Form 10-Q and other documents filed by us from time to time with the SEC. Forward-looking statements speak only as of the date they are made. Readers are cautioned not to put undue reliance on forward-looking statements, and no person assumes any obligation to update or revise any such forward-looking statements, whether as a result of new information, future events or otherwise.
